What is the Model Context Protocol (MCP)? A Beginner's Guide

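So what does MCP stand for in AI? MCP stands for the Model Context Protocol, an open standard that defines how AI applications provide context to large language models by connecting them to external data sources and tools. It's a fundamental shift in how we approach connecting AI systems to the real world.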

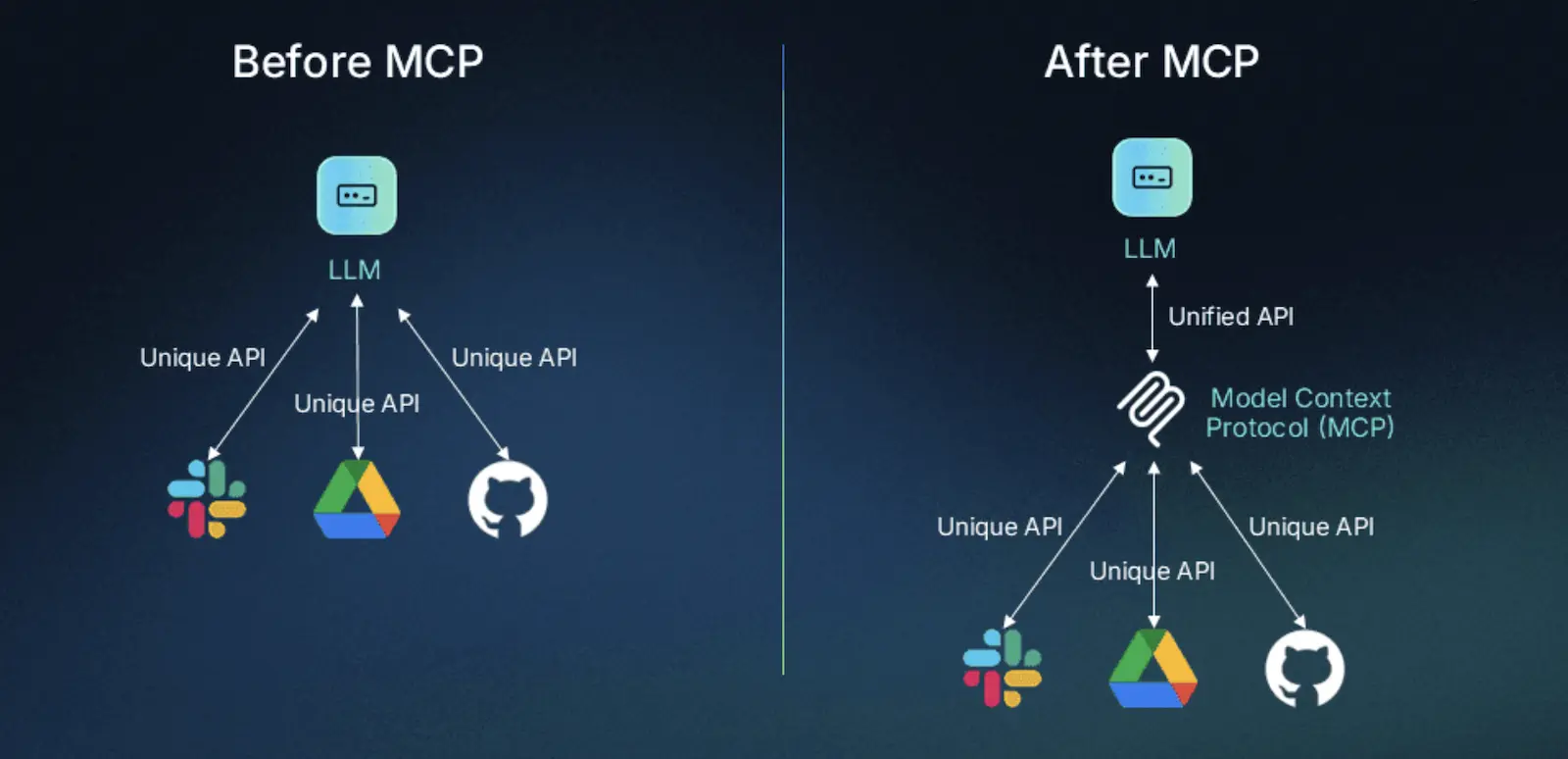

Imagine this: you're a developer, staring at your screen, a brilliant idea for an AI application brewing in your mind. You want it to connect to your company's Slack, sift through GitHub issues, pull data from Google Drive, and query a PostgreSQL database. But then, the reality hits you. The sheer amount of custom code, the tangled mess of APIs, the endless authentication hoops - it's like needing a different key for every single door in a skyscraper. It's not just inefficient. It's a creativity killer.

This isn't just a hypothetical scenario. It's the daily reality for countless developers. It's a problem that has, for a long time, kept AI's true potential locked away. We have these incredibly powerful large language models (LLMs), but they're like brilliant minds trapped in soundproof rooms, unable to interact with the world of data and tools that would make them truly transformative. Every connection is a custom job, a fragile piece of 'glue code' that threatens to break with every API update.

But what if there were a master key? A single, universal standard that could unlock all those doors? That is the realization many in the tech community have had with the arrival of the Model Context Protocol (MCP). As one AWS developer put it, understanding MCP is "like one of those rare 'aha!' moments you sometimes have in tech." It's the moment you realize that the frustrating, fragmented world of AI integration doesn't have to be this way.

Announced by Anthropic in November 2024, MCP represents a fundamental shift in how we think about AI integration. Rather than building point-to-point connections between every AI application and every data source, MCP provides a universal protocol that standardizes these interactions. This means developers can build AI applications that seamlessly connect to multiple data sources without writing custom code for each integration, while data providers can expose their services to the entire AI ecosystem through a single, well-defined interface.

In this comprehensive guide, we'll explore everything you need to know about the Model Context Protocol. We'll start by examining the specific problems MCP solves, dive deep into how the protocol works, explore real-world use cases that demonstrate its power, and provide practical guidance for getting started with MCP in your own projects. Whether you're an AI developer looking to streamline your integrations, a product manager evaluating AI strategies, or a technical decision-maker planning your organization's AI roadmap, this guide will give you the knowledge you need to understand and leverage this transformative technology.

By the end of this article, you'll understand not just what MCP is, but why it represents such a significant advancement for the AI industry and how it can help you build more powerful, maintainable, and scalable AI applications. Let's begin by examining the integration challenges that made MCP necessary in the first place.

The Problem: When AI Feels Like a Brilliant Colleague Locked in a Room

Let me tell you about Alexey, a developer who perfectly captured the frustration many of us feel with AI integration. When he first started working with AI assistants like Claude, his workflow was, in his words, "fragmented and inefficient." He'd draft content in his text editor, copy portions into a chat interface, review the AI's suggestions, manually copy the useful parts back to his document, and repeat. "It was functional but tedious," he recalls, "with constant context-switching that interrupted my creative flow."

This is the reality for most of us working with AI today. We have these incredibly sophisticated models that can reason, analyze, and create, but they're essentially isolated from the very data and systems that could make them truly useful. It's like having a brilliant colleague who's locked in a soundproof room - they have all the knowledge and skills you need, but they can't see your work, access your files, or interact with your tools.

The current approach to solving this involves what developers call "glue code" - custom integrations that connect AI to specific services. But here's the catch: every new data source means starting from scratch. Want to connect to Slack? Write custom code. Need GitHub access? Another custom integration. Add Google Drive? Yet another bespoke solution. Each integration becomes a maintenance burden, a potential point of failure, and a barrier to innovation.

As organizations try to scale their AI initiatives, this approach becomes unsustainable. The math is brutal: with five AI applications and ten data sources, connecting every application to every source can take up to fifty separate integrations (5 × 10), each with its own quirks, authentication methods, and failure modes. It's no wonder that many promising AI projects never make it past the prototype stage.
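To make the arithmetic concrete: connecting every application directly to every data source grows as M × N, while a shared protocol, in the idealized case where each application implements one client and each data source implements one server, grows as M + N. A quick sketch (the function names are ours, purely illustrative):

```python
def point_to_point(apps: int, sources: int) -> int:
    """Custom glue code: every app needs its own connector to every source."""
    return apps * sources


def shared_protocol(apps: int, sources: int) -> int:
    """One shared protocol: each app implements a client once and each
    source implements a server once (an idealized assumption)."""
    return apps + sources


apps, sources = 5, 10
print(point_to_point(apps, sources))   # 50
print(shared_protocol(apps, sources))  # 15
```

The gap widens as either side grows: at 20 applications and 50 data sources it is 1,000 connectors versus 70.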

What is the Model Context Protocol?

Remember that AWS developer I mentioned earlier? When he first read about MCP, he admits he "wasn't exactly sure what it was." But once it clicked, he described it as giving "your AI assistant superpowers without needing to fully retrain it." That's the beauty of MCP - it's simultaneously simple in concept and revolutionary in impact.

The Model Context Protocol is, at its heart, "an open protocol that standardizes how applications provide context to large language models (LLMs)" [2]. But let's break that down into human terms. Imagine you have a brilliant research assistant who knows everything about everything, but they're working from a windowless office with no internet, no phone, and no access to your company's files. They can give you amazing advice based on what they already know, but they can't look up current information, check your calendar, or access your project files.

Now imagine giving that assistant a universal communication system - a protocol that lets them talk to any system in your organization through a single, standardized interface. Suddenly, they can check your Slack messages, review your GitHub repositories, analyze your Google Drive documents, and query your databases. They haven't become smarter in terms of raw intelligence, but they've become infinitely more useful because they can finally see and interact with your world.

This is exactly what MCP does for AI systems. It's like building a universal translator that allows any AI application to communicate with any data source or tool through a single, consistent language. Just as the USB-C standard eliminated the chaos of proprietary connectors and cables, MCP eliminates the chaos of custom AI integrations. Understanding how MCP works is key to grasping why it's so revolutionary for connecting AI to real-world systems.

The Birth of a Standard

The story of MCP's creation is rooted in real frustration. As Anthropic's developers worked with their own AI systems, they kept running into the same wall: "even the most sophisticated models are constrained by their isolation from data - trapped behind information silos and legacy systems" [1]. They weren't just building a protocol for the sake of it. They were solving a problem they faced every day.

What makes MCP special is its commitment to openness. This isn't a proprietary solution designed to lock you into a specific vendor's ecosystem. It's an open standard that "everyone is free to implement and use" [2]. This openness is crucial because it means MCP can become a true industry standard rather than just another vendor-specific solution.

The protocol is also designed to be vendor-agnostic from the ground up. You can use MCP with Claude today, switch to a different AI model tomorrow, and your integrations will continue to work, as long as the new application also supports MCP. It's like having a universal power adapter that works with any device, anywhere in the world.

How MCP Actually Works: The Magic Behind the Curtain

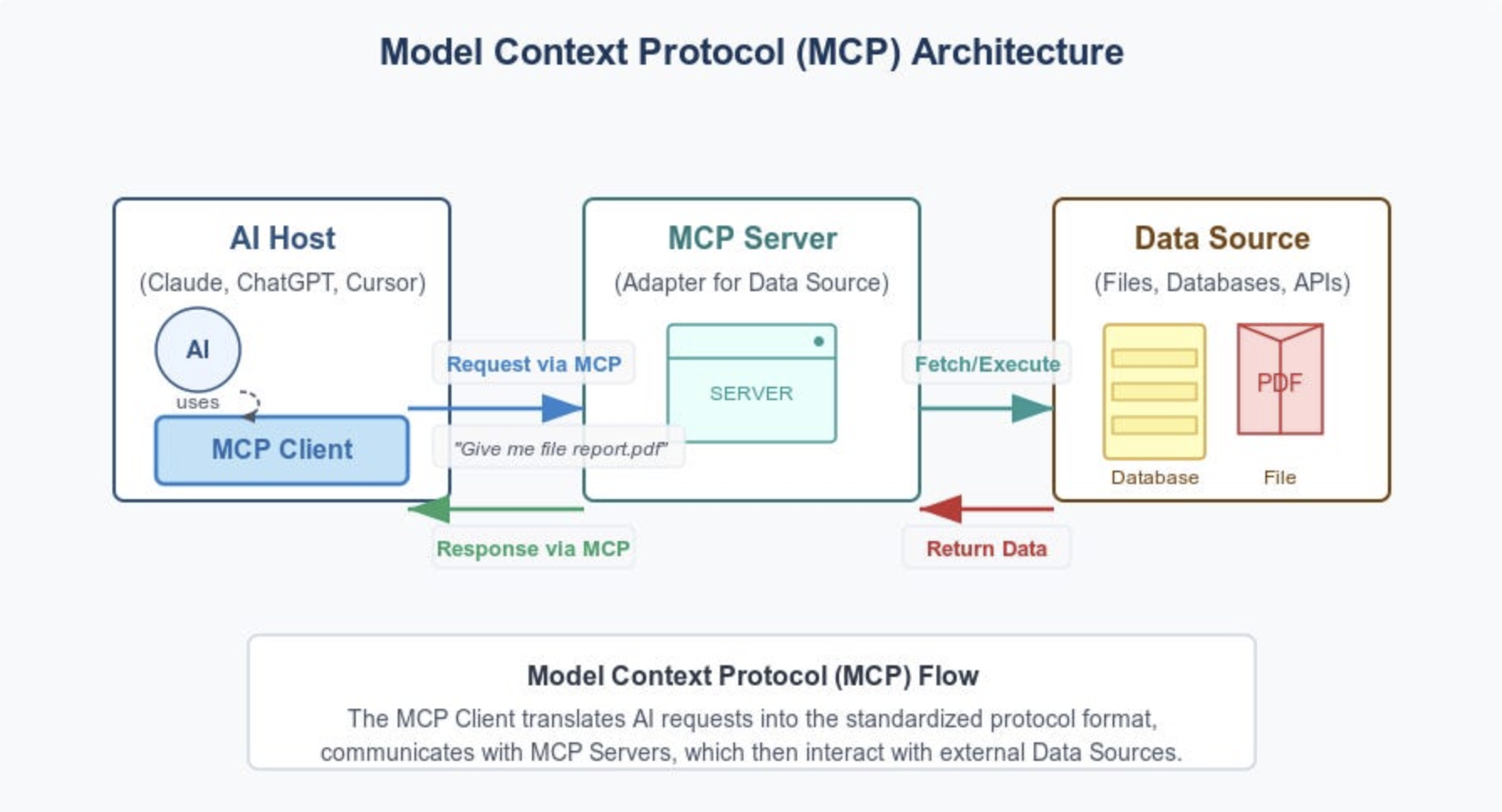

At its core, MCP implements what's called a client-server architecture, but don't let the technical jargon intimidate you. Think of it like a well-organized company with clear roles and responsibilities.

The MCP Host is like the CEO - it's the AI application (like Claude Desktop) that coordinates everything and makes the big decisions. The host doesn't do the detailed work itself. Instead, it delegates to specialized teams.

MCP Clients are like department managers. Each client lives inside the host and maintains a dedicated, one-to-one connection with a single MCP server, and through it, with one data source or service. Clients handle all the communication details, authentication, and data formatting so the host doesn't have to worry about these complexities.

MCP Servers are like specialized service providers. Each server is an expert in exposing a particular type of data or functionality - whether that's accessing Google Drive files, querying a PostgreSQL database, or interacting with the GitHub API. These servers speak the universal MCP language, so any MCP client can work with any MCP server.

This architecture creates what developers call "separation of concerns." The AI application focuses on being intelligent, the clients focus on communication, and the servers focus on data access. Nobody has to be an expert in everything, but everyone can work together seamlessly.
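To make these roles concrete, here is a minimal in-process sketch. `FakeServer`, `Client`, and `Host` are hypothetical names, not classes from any MCP SDK, and the "connection" is a plain Python reference rather than a real transport. It shows only the shape of the relationship: the host owns one client per server and never calls a server directly.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class FakeServer:
    """In-process stand-in for an MCP server: it owns named tools."""
    name: str
    tools: Dict[str, Callable[..., Any]]


@dataclass
class Client:
    """One client per server; it is the only thing that talks to that server."""
    server: FakeServer

    def list_tools(self) -> List[str]:
        return sorted(self.server.tools)

    def call_tool(self, tool: str, **arguments: Any) -> Any:
        return self.server.tools[tool](**arguments)


@dataclass
class Host:
    """The AI application: creates a client per server and routes each
    tool call to whichever client's server offers that tool."""
    clients: List[Client] = field(default_factory=list)

    def connect(self, server: FakeServer) -> None:
        self.clients.append(Client(server))

    def call_tool(self, tool: str, **arguments: Any) -> Any:
        for client in self.clients:
            if tool in client.list_tools():
                return client.call_tool(tool, **arguments)
        raise KeyError(f"no connected server offers {tool!r}")


host = Host()
host.connect(FakeServer("files", {"read_file": lambda path: f"<contents of {path}>"}))
host.connect(FakeServer("database", {"count_rows": lambda table: 42}))
print(host.call_tool("count_rows", table="orders"))  # 42
```

A real host also has to decide what to do when two servers expose tools with the same name; this sketch simply takes the first match.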

The Universal Interface Revolution

One of MCP's most powerful features is that it provides what we call a "universal interface." Instead of learning dozens of different APIs, authentication methods, and data formats, developers work with a single, consistent interface that handles all the underlying complexity.

Think about how transformative this is. A single AI application can seamlessly access Slack messages, GitHub repositories, Google Drive files, and PostgreSQL databases through the exact same interface, even though these services have completely different underlying APIs and data models. It's like having a universal remote control that works with every device in your house, regardless of brand or model.

This universal interface enables something that was previously very difficult: true composition and workflow capabilities. Because all data sources and tools are accessed through the same interface, it becomes much easier to build AI workflows that span multiple systems. An AI assistant can retrieve customer data from a CRM, analyze it using a machine learning model, generate a report, and save it to cloud storage - all through the same standardized interface.

The Growing Ecosystem: A Community Effort

What's particularly exciting about MCP is how quickly the ecosystem is growing. Anthropic didn't just release a specification and hope for the best. They launched with "an open-source repository of MCP servers" and provided "pre-built MCP servers for popular enterprise systems like Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer" [1].

But the real magic is happening in the community. Developers around the world are building MCP servers for their favorite tools and services. Alexey, the developer I mentioned earlier, created a simple but powerful MCP server that leverages Mac's Spotlight database to search through files. With just a few lines of code, he extended Claude's capabilities to include searching through his entire filesystem.

The community aspect creates a virtuous cycle. As more organizations implement MCP servers for their services, the entire community benefits from access to those integrations. A developer building an AI application can leverage MCP servers built by others, while organizations can expose their services to the AI ecosystem by implementing MCP servers.

Early adopters are already seeing the benefits. Major companies like Block and Apollo have integrated MCP into their systems, while development tool companies including Zed, Replit, Codeium, and Sourcegraph are working with MCP to enhance their platforms [1]. This isn't just theoretical adoption: these are real companies using MCP to solve real problems.

Context-Aware AI: The Real Game Changer

Perhaps the most transformative aspect of MCP is how it enables truly context-aware AI applications. Traditional AI applications operate with limited context - they can only work with the information provided in a single conversation or document. MCP changes this fundamental limitation by allowing AI applications to dynamically access relevant context from multiple sources as needed.

This context-aware capability transforms AI from a static question-answering system into a dynamic agent that can gather information, perform actions, and adapt its behavior based on real-time data. An AI assistant with MCP access can check your calendar before scheduling a meeting, review relevant documents before answering a question, or analyze current data before making a recommendation.

The protocol's design specifically supports this dynamic context gathering through what we call its "three core primitives" - tools, resources, and prompts. These primitives provide the building blocks for sophisticated AI interactions that go far beyond simple text generation. We'll explore these in detail in the next section, but the key insight is that MCP enables AI to become an active participant in your workflows rather than just a passive advisor.

MCP represents a fundamental shift from isolated AI systems to connected, context-aware applications that can truly integrate with the digital world around us. It's not just solving today's integration problems; it's building the foundation for tomorrow's AI-native organizations. In the following section, we'll dive deeper into the technical foundation that makes this integration possible.

How MCP Works: The Technical Foundation

Now that we understand what MCP is and why it matters, let's peek under the hood to see how it actually works. Don't worry - I'll keep this accessible even if you're not a protocol engineer.

The Two-Layer Architecture

MCP's simplicity lies in its two-layer design that separates the "what" from the "how".

The Data Layer is where the actual conversation happens. It's built on JSON-RPC 2.0, a lightweight standard for encoding requests, responses, and notifications as JSON; in other words, "a standardized way for computers to talk to each other." This layer handles all the important stuff: what capabilities each side has, what data is available, and what actions can be performed. It's like the actual conversation between two people: the content and meaning.
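Here is roughly what a JSON-RPC 2.0 exchange looks like at the data layer. The `tools/call` method and the `content`/`isError` result shape follow the MCP specification as we understand it; the `get_weather` tool and its arguments are hypothetical, and the helper functions exist only for illustration.

```python
import json
from itertools import count
from typing import Optional

_next_id = count(1)  # request ids must be unique within a session


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_result(request_id: int, result: dict) -> dict:
    """Build the success response that answers a given request id."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


# Client -> server: call a (hypothetical) weather tool.
request = make_request(
    "tools/call", {"name": "get_weather", "arguments": {"city": "Berlin"}}
)
# Server -> client: tool output comes back as a list of content items.
response = make_result(
    request["id"],
    {"content": [{"type": "text", "text": "Sunny, 21 degrees"}], "isError": False},
)
print(json.dumps(request))
print(json.dumps(response))
```

Notice that nothing in these messages says anything about weather APIs, authentication, or HTTP; that is exactly what lets the same message shape work for a database, a file system, or GitHub.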

The Transport Layer is how the messages actually get delivered. It can use local connections, where the server runs as a subprocess and messages travel over standard input and output (like talking to someone in the same room), or remote connections over HTTP (like making a phone call). The beautiful thing is that the conversation stays the same regardless of how the messages are delivered.
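For the local case, the MCP specification's stdio transport frames messages as newline-delimited JSON: each message is one line and must not contain a raw newline. The sketch below uses an in-memory `io.StringIO` buffer as a stand-in for the subprocess pipe, and the `echo` tool is hypothetical.

```python
import io
import json
from typing import List, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Newline-delimited framing: one JSON object per line. json.dumps
    (without indent) escapes newlines inside strings, so each message
    stays on a single line."""
    stream.write(json.dumps(message) + "\n")


def read_messages(stream: TextIO) -> List[dict]:
    """Parse each non-empty line back into a message."""
    return [json.loads(line) for line in stream if line.strip()]


# An in-memory buffer stands in for the pipe between client and subprocess.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "echo", "arguments": {"text": "line one\nline two"}},
})
pipe.seek(0)
for message in read_messages(pipe):
    print(message["id"], message["method"])
```

Because the framing lives entirely in these two functions, swapping in a different transport leaves the JSON-RPC messages themselves untouched, which is the point of the two-layer split.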

MCP's Building Blocks

Here's where MCP gets really clever. Instead of trying to handle every possible type of interaction, it defines just three fundamental building blocks that can be combined to create any workflow you can imagine.

MCP Tools are executable functions - think of them as actions your AI can perform. Want to create a file? There's a tool for that. Need to query a database? Another tool. Send an email? Yet another tool. Each tool has a clear description of what it does and what parameters it needs, so the AI knows exactly how to use it.

MCP Resources are sources of information - files, database records, API responses, or any other data your AI might need to understand. Unlike tools, resources are read-only. They're like reference books that the AI can consult to get context and information.

MCP Prompts are reusable templates that help structure interactions. Think of them as conversation starters or frameworks that ensure consistent, high-quality interactions. They might include system instructions, examples of good responses, or templates for specific types of tasks.
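To see what the three building blocks look like on the wire, here is a sketch of how a server might describe one of each. Field names such as `inputSchema`, `uri`, `mimeType`, and prompt `arguments` follow the MCP specification as we understand it; the specific tool, resource, and prompt are hypothetical, and the two helper functions are a simplified illustration (real servers validate arguments against the full JSON Schema).

```python
from typing import Dict, List

# Hypothetical examples of how a server might describe each primitive.
TOOL = {
    "name": "query_database",
    "description": "Run a read-only SQL query against the sales database",
    "inputSchema": {  # JSON Schema for the tool's parameters
        "type": "object",
        "properties": {"sql": {"type": "string"}},
        "required": ["sql"],
    },
}

RESOURCE = {
    "uri": "file:///reports/q3-summary.md",
    "name": "Q3 summary",
    "mimeType": "text/markdown",
}

PROMPT = {
    "name": "review_code",
    "description": "Ask the model to review a code snippet",
    "arguments": [
        {"name": "code", "description": "The code to review", "required": True},
    ],
}


def missing_required(tool: Dict, arguments: Dict) -> List[str]:
    """Return required parameters a tool call left out (a tiny subset of
    full JSON Schema validation)."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


def render_prompt(prompt: Dict, arguments: Dict) -> List[Dict]:
    """Fill the template and return chat messages, shaped like the
    `messages` list in a prompts/get result."""
    for arg in prompt["arguments"]:
        if arg.get("required") and arg["name"] not in arguments:
            raise ValueError(f"missing prompt argument: {arg['name']}")
    text = f"Please review this code:\n\n{arguments['code']}"
    return [{"role": "user", "content": {"type": "text", "text": text}}]


print(missing_required(TOOL, {}))  # ['sql']
print(render_prompt(PROMPT, {"code": "x = 1"})[0]["content"]["text"])
```

The tool's schema is what lets a model know which parameters to supply; the resource is just an addressable piece of data; the prompt is a template the user or application can invoke by name.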

How It All Comes Together

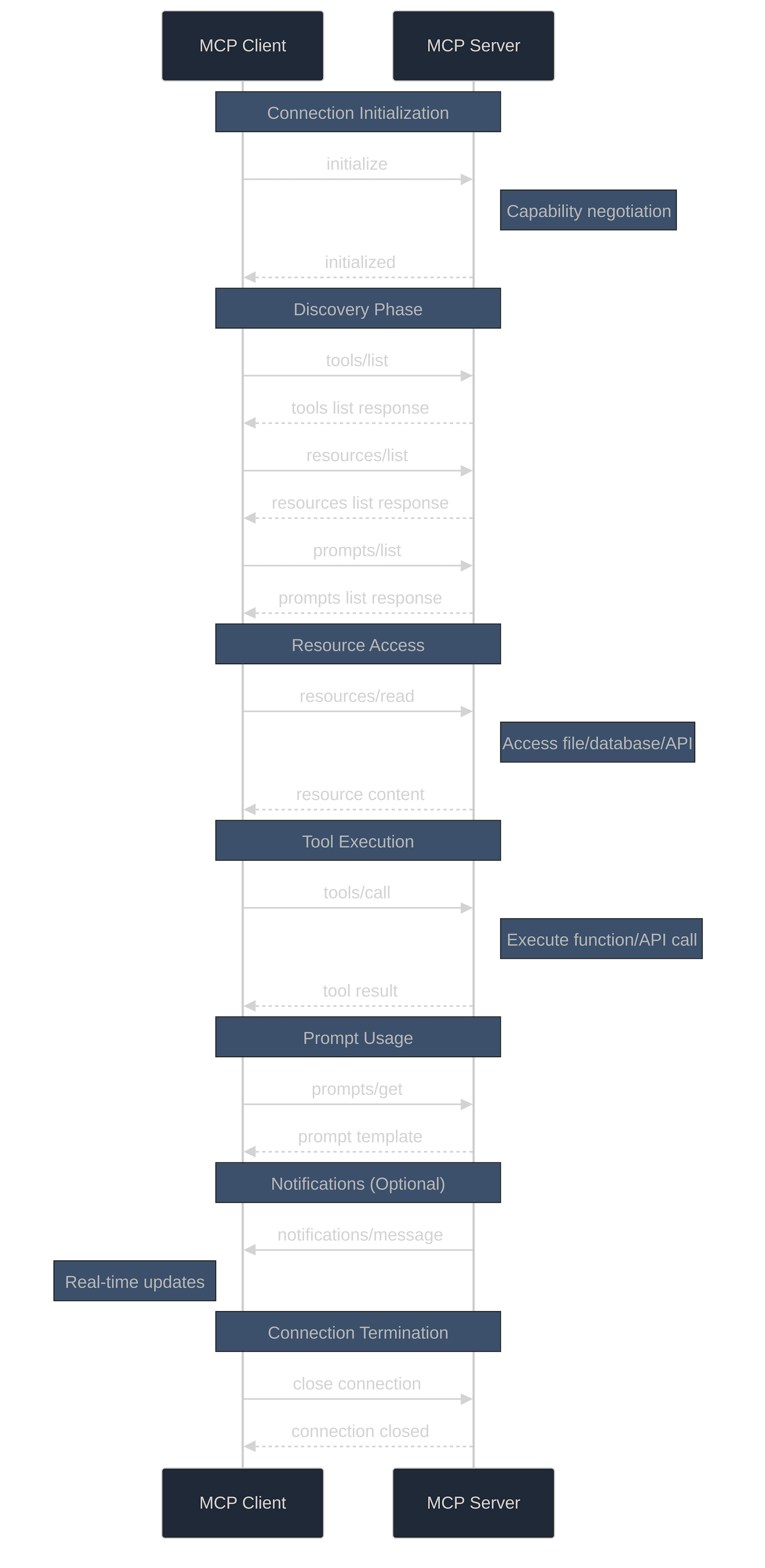

Here's where the sequence diagram above becomes really useful. When an MCP client connects to a server, they go through what I like to call the "discovery dance."

First, they shake hands and learn what the other side can do. This is the initialization phase, in which client and server agree on a protocol version and exchange their capabilities. Then the client asks, "What tools do you have?" "What resources can you provide?" "What prompts are available?" The server responds with detailed lists of everything it offers.

This dynamic discovery is crucial because it means MCP servers can adapt their offerings based on context, permissions, or current system state. A database server might offer different tools to different users based on their access levels, or a file server might show different resources based on what's currently available.

When the AI actually needs to do something, it's as simple as calling a tool, reading a resource, or using a prompt. The server handles all the complexity of actually performing the action or retrieving the data, and returns the results in a standardized format.
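The discovery dance can be sketched as a toy server that answers JSON-RPC messages in memory. The method names (`initialize`, `notifications/initialized`, `tools/list`, `tools/call`) and the error code `-32601` (JSON-RPC's "method not found") come from the MCP and JSON-RPC specifications; the `add` tool, the server and client names, and the protocol version string are assumptions for illustration. A real server would also validate arguments and report tool failures with `isError: true`.

```python
from typing import Any, Dict, Optional

PROTOCOL_VERSION = "2024-11-05"  # assumption: one published MCP revision

# Hypothetical tool registry: name -> description, JSON Schema, implementation.
TOOLS: Dict[str, Dict[str, Any]] = {
    "add": {
        "description": "Add two integers",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "fn": lambda a, b: a + b,
    },
}


def handle(message: dict) -> Optional[dict]:
    """Answer one JSON-RPC message the way a minimal MCP server might."""
    if "id" not in message:  # notifications never get a response
        return None
    method, msg_id = message["method"], message["id"]
    params = message.get("params", {})
    if method == "initialize":
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},  # advertise: "I offer tools"
            "serverInfo": {"name": "demo-server", "version": "0.1.0"},
        }
    elif method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS[params["name"]]  # no unknown-tool handling in this sketch
        value = tool["fn"](**params["arguments"])
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": msg_id,
                "error": {"code": -32601, "message": f"Method not found: {method}"}}
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


# The discovery dance, in order: handshake, "ready", discover, then act.
conversation = [
    {"jsonrpc": "2.0", "id": 1, "method": "initialize",
     "params": {"protocolVersion": PROTOCOL_VERSION, "capabilities": {},
                "clientInfo": {"name": "demo-client", "version": "0.1.0"}}},
    {"jsonrpc": "2.0", "method": "notifications/initialized"},
    {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
    {"jsonrpc": "2.0", "id": 3, "method": "tools/call",
     "params": {"name": "add", "arguments": {"a": 2, "b": 3}}},
]
replies = [handle(m) for m in conversation]
for reply in replies:
    print(reply)
```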

Understanding MCP Servers and Clients

To truly grasp how MCP works, it's essential to understand the relationship between MCP servers and MCP clients. This client-server architecture is what makes the entire system work seamlessly.

What is an MCP server in AI? An MCP server is essentially a bridge that exposes specific data sources, tools, or capabilities to AI systems through the standardized MCP protocol. When you add an MCP server to your setup, you're creating a gateway that allows AI applications to access and interact with particular resources or services. For example:

- A Google Drive MCP server exposes your files and folders

- A GitHub MCP server provides access to repositories, issues, and pull requests

- A database MCP server allows querying and data manipulation

- A Slack MCP server enables reading messages and posting updates

How does an MCP server work? Each MCP server implements whichever of the three core primitives (tools, resources, and prompts) make sense for its domain. A file system server might offer tools for creating and deleting files, resources for reading file contents, and prompts for common file operations. The server handles all the complexity of authentication, data access, and error handling, presenting a clean, standardized interface to AI clients.

What is an MCP client? An MCP client is the component of an AI application that consumes services from MCP servers. Strictly speaking, an application such as Claude Desktop is the host, and it creates one MCP client for each server it connects to; custom AI applications built by developers work the same way. The client is responsible for connecting to its server, discovering that server's capabilities, and making appropriate requests.

MCP Architecture Components

Looking at the main components of the MCP architecture, we see a well-orchestrated system:

- Transport Layer: Handles the actual message delivery using protocols like HTTP or local IPC

- Protocol Layer: Implements the JSON-RPC 2.0 based communication standard

- Discovery System: Allows dynamic capability negotiation between clients and servers

- Security Framework: Manages authentication, authorization, and secure communication

- Primitive System: The tools, resources, and prompts that form the functional core

This architecture enables connecting AI systems to virtually any data source or service through a consistent, standardized approach.

Why This Architecture Matters

This design might seem simple, but it's incredibly powerful. By standardizing just these three types of interactions, MCP can handle virtually any integration scenario while keeping the complexity manageable. It's like how LEGO blocks - despite being simple - can be combined to build incredibly complex structures.

The stateful nature of MCP connections also enables sophisticated workflows. The client and server can maintain context across multiple interactions, enabling complex multi-step processes that would be difficult to achieve with stateless approaches.
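Here is one way to picture that statefulness: a per-connection object that remembers whether the handshake has finished and which protocol version was agreed. The negotiation rule (keep the client's requested version if supported, otherwise offer the server's newest) follows the MCP lifecycle as we understand it. The `Session` class, the version list, and the choice to reject early requests with JSON-RPC's `-32600` (invalid request) code are assumptions in this sketch, and a real `initialize` request also carries `capabilities` and `clientInfo`, omitted here for brevity.

```python
from typing import List, Optional

# Assumption: revisions this hypothetical server supports, newest first.
SUPPORTED_VERSIONS: List[str] = ["2025-06-18", "2024-11-05"]


class Session:
    """Per-connection state a server might keep (a sketch, not an SDK class)."""

    def __init__(self) -> None:
        self.protocol_version: Optional[str] = None
        self.ready = False  # flips to True on notifications/initialized

    def on_message(self, message: dict) -> Optional[dict]:
        method = message["method"]
        if method == "initialize":
            requested = message["params"]["protocolVersion"]
            # Keep the client's version if we support it, else offer our newest.
            if requested in SUPPORTED_VERSIONS:
                self.protocol_version = requested
            else:
                self.protocol_version = SUPPORTED_VERSIONS[0]
            return {"jsonrpc": "2.0", "id": message["id"], "result": {
                "protocolVersion": self.protocol_version,
                "capabilities": {},
                "serverInfo": {"name": "demo-server", "version": "0.1.0"},
            }}
        if method == "notifications/initialized":
            self.ready = True
            return None  # notifications get no reply
        if not self.ready and method != "ping":
            # Hypothetical policy: refuse real work until the handshake is done.
            return {"jsonrpc": "2.0", "id": message["id"],
                    "error": {"code": -32600, "message": "session not initialized"}}
        # Placeholder: a real server would dispatch the method here.
        return {"jsonrpc": "2.0", "id": message["id"], "result": {}}


session = Session()
early = session.on_message({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
session.on_message({"jsonrpc": "2.0", "id": 2, "method": "initialize",
                    "params": {"protocolVersion": "2024-11-05"}})
session.on_message({"jsonrpc": "2.0", "method": "notifications/initialized"})
later = session.on_message({"jsonrpc": "2.0", "id": 3, "method": "tools/list"})
print("error" in early, session.protocol_version, "result" in later)
```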

Most importantly, this architecture scales beautifully. Whether you're connecting to a simple file system or a complex enterprise application, the patterns remain the same. This consistency makes it much easier for developers to learn and use MCP effectively.

Real-World Benefits of MCP

Let me tell you about the moment everything clicked for Alexey, the developer whose story opened this article. After implementing MCP, his writing workflow transformed completely. "A writing project that previously took me days of back-and-forth can now be completed in hours," he says. "Research tasks that required tedious manual organization now flow naturally as Claude helps me collect, organize, and synthesize information directly into my documents."

This isn't just about productivity gains - though those are substantial. It's about fundamentally changing the relationship between humans and AI. As Alexey puts it, "Rather than seeing LLMs as tools to generate snippets of content, I now view them as collaborators in my creative process - partners who can see what I'm working on and contribute meaningfully to its development."

Industry experts have identified six key ways MCP transforms AI integration, and each one addresses a real pain point that developers face every day.

1. Visibility. Finally, AI Can See Your Stack

Before MCP, AI systems were like brilliant consultants who had to work blindfolded. As one technical analyst puts it, "LLMs can't be useful if they can't see your stack". MCP changes this by giving AI real-time access to your infrastructure, data, and tools. Suddenly, your AI assistant can see customer data, project status, system metrics, and business processes - enabling contextually relevant assistance that actually understands your situation.

2. Structure. Bringing Order to Chaos

Enterprise software is notoriously complex for AI to understand: its APIs are "sometimes inconsistent or lack[ing] the metadata necessary for intelligent consumption." MCP addresses this by providing a standardized way for services to describe themselves to AI systems. It's not just about having APIs; it's about having APIs that AI can understand and use intelligently.

3. Real-Time Workflows. No More Polling and Scripting

Traditional AI integrations often require polling, scripting or intermediary APIs just to trigger simple actions. With MCP, agents maintain active connections to services, enabling immediate response to changing conditions. An AI assistant in your development environment can instantly query telemetry data, look up configurations, or call microservices without manual reconfiguration.
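The "active connection" idea rests on JSON-RPC notifications: messages with a `method` but no `id`, which the server can push at any time and which expect no reply. MCP defines notifications such as `notifications/tools/list_changed` and `notifications/resources/updated` (the latter for resources a client has subscribed to). The router class and the `metrics://` URI below are hypothetical; the sketch just records what would need refreshing, instead of polling on a timer.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


def is_notification(message: dict) -> bool:
    """JSON-RPC 2.0: a notification has a method but no id and gets no reply."""
    return "method" in message and "id" not in message


class NotificationRouter:
    """Hypothetical client-side dispatcher: react as soon as the server
    pushes a notification instead of polling on a timer."""

    def __init__(self) -> None:
        self.handlers: Dict[str, Handler] = {}

    def on(self, method: str, handler: Handler) -> None:
        self.handlers[method] = handler

    def dispatch(self, message: dict) -> bool:
        """Run the matching handler; return True if one ran."""
        if not is_notification(message):
            return False
        handler = self.handlers.get(message["method"])
        if handler is None:
            return False
        handler(message.get("params", {}))
        return True


stale: List[str] = []  # things the client should re-fetch
router = NotificationRouter()
router.on("notifications/resources/updated", lambda params: stale.append(params["uri"]))
router.on("notifications/tools/list_changed", lambda params: stale.append("tool list"))

router.dispatch({"jsonrpc": "2.0", "method": "notifications/resources/updated",
                 "params": {"uri": "metrics://cluster/cpu"}})
router.dispatch({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})
print(stale)  # ['metrics://cluster/cpu', 'tool list']
```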

4. Flexibility Without Lock-In

MCP is AI vendor-agnostic by design and doesn't lock you into a specific LLM provider, AI toolchain or cloud vendor. You can mix and match AI systems, swap out vendors over time, and adapt to new technologies without breaking your integrations. It's like having a universal adapter that works with any AI system.

5. Built-In Security and Governance

Unlike traditional approaches where security is an afterthought, MCP integrates security into the protocol itself. Each MCP server defines exactly what it exposes and under which credentials, enabling fine-grained access control that aligns with existing enterprise security policies. AI agents inherit the same permission models you already use across your systems.
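As a hedged illustration of a server that "defines exactly what it exposes": how a server maps credentials to permissions is left to the server, and recent revisions of the MCP specification describe an OAuth-based authorization flow for HTTP transports. The sketch below assumes the caller's role has already been established and uses a hypothetical role table to filter both what `tools/list` would advertise and what `tools/call` would allow.

```python
from typing import Dict, List, Set

# Hypothetical policy: which caller roles may use which tools.
TOOL_PERMISSIONS: Dict[str, Set[str]] = {
    "read_rows": {"analyst", "admin"},
    "delete_rows": {"admin"},
}


def list_tools_for(role: str) -> List[str]:
    """What this server would advertise in tools/list for the caller."""
    return sorted(tool for tool, roles in TOOL_PERMISSIONS.items() if role in roles)


def authorize_call(role: str, tool: str) -> None:
    """Refuse a tools/call the caller may not make, even if the model
    somehow knows the tool's name."""
    if role not in TOOL_PERMISSIONS.get(tool, set()):
        raise PermissionError(f"{role!r} may not call {tool!r}")


print(list_tools_for("analyst"))  # ['read_rows']
print(list_tools_for("admin"))    # ['delete_rows', 'read_rows']
```

Checking on both paths matters: hiding a tool from the list is not enough if a call by name would still succeed.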

6. Future-Proofing for an AI-Native World

MCP prepares organizations for a future where IDEs become copilots and terminals are replaced by natural language. By providing standardized access to infrastructure, MCP enables AI to become a true partner in software development and business operations rather than just an external tool.

The Bottom Line - Measurable Impact

Organizations adopting MCP report dramatic improvements across key metrics. Development teams see 70-80% reductions in integration development time. Maintenance costs drop by 50-60% due to standardized approaches. Most importantly, the expanded capabilities enable use cases that would be prohibitively expensive with custom integrations, leading to higher ROI on AI initiatives.

But perhaps the most significant benefit is the one Alexey experienced: MCP doesn't just make AI more efficient - it makes AI more collaborative, more contextual, and more genuinely useful in real-world scenarios.

MCP in Action: Real Stories from the Trenches

Here's where the rubber meets the road. Let me share some real stories from developers and professionals who've implemented MCP in their daily workflows. These aren't theoretical use cases - they're actual implementations that are saving people hours every day and opening up possibilities that simply weren't practical before.

YouTube MCP and Content Repurposing

Take Sarah, a technical content creator who was drowning in the complexity of repurposing content across platforms. She'd create a detailed YouTube video about a new technology, then spend hours manually extracting key points, reformatting for different social media platforms, and ensuring consistent messaging across channels.

With MCP, Sarah's workflow transformed overnight. She now uses the YouTube MCP integration to automatically transcribe her videos and extract key insights. The AI analyzes the transcription, identifies memorable quotes and engaging segments, then generates platform-specific content: LinkedIn posts emphasizing professional insights, Twitter threads breaking down complex concepts, and Instagram captions focusing on visual elements.

"What used to take me an entire afternoon now happens in about 15 minutes," Sarah explains. "But it's not just about speed - the AI maintains my voice and style across platforms while adapting the content for each audience. It's like having a brilliant assistant who really understands my brand."

The process is remarkably simple. Sarah asks her AI assistant: "Can you analyze my latest YouTube video and create social media content for LinkedIn, Twitter, and Instagram?" The AI accesses the video through the YouTube MCP server, processes the content, and generates tailored posts for each platform. Sarah can then edit and refine the content before publishing, creating an efficient workflow that maximizes content value while minimizing manual effort.

MCP Server for Developer Docs

Consider Hailey, a data scientist who wrote about her MCP journey in Towards Data Science. She faced a problem that every developer knows intimately: keeping up with constantly changing documentation. "How many times have you watched Claude confidently spit out deprecated code or reference libraries that haven't been updated since 2021?" she asks.

Hailey's solution was elegant: she built a custom MCP server that provides real-time access to the latest documentation from AI libraries like LangChain, OpenAI, MCP itself, and LlamaIndex. This server uses real-time search, scrapes live content, and gives her AI assistant fresh knowledge on demand.

The implementation process was surprisingly straightforward. Using the official MCP SDK from Anthropic, Hailey created a server that could search documentation sites, extract relevant content, and provide it to Claude in a structured format. The result? Her AI assistant now provides accurate, up-to-date code examples and implementation guidance based on the latest documentation.

MCP Server for Cold Email Outreach

Marcus, a B2B sales professional, used to spend entire days researching prospects and crafting personalized outreach messages. His process was manual and time-consuming: research each company individually, find contact information, understand their business challenges, and craft personalized messages that would resonate with decision-makers.

MCP changed everything. Marcus now uses a workflow that combines multiple MCP servers to automate the entire research and outreach process. The Perplexity MCP integration researches each company's website, gathering information about their business model, recent news, and potential pain points. The Firecrawl MCP server extracts contact information from company websites. Finally, the Email Marketing MCP server helps compose and send personalized outreach messages.

The entire process, which previously took Marcus several days, now happens in hours. "I can research 50 companies and create personalized outreach campaigns in the time it used to take me to research five," Marcus explains. "But the quality hasn't suffered - if anything, it's improved because the AI can process more information than I ever could manually."

MCP for Automated Monitoring and Research

Dr. Elena Rodriguez, a market researcher, faced the challenge of staying current with rapidly evolving industries while conducting thorough competitive analysis. Her traditional approach involved manually searching multiple sources, copying information into spreadsheets, and trying to create insights from disparate data sources.

With the Perplexity MCP integration, Elena's research process became dramatically more efficient. She can now ask her AI assistant to monitor specific topics, gather information from multiple sources, and provide comprehensive briefings on new developments. The AI searches for recent developments, gathers competitive intelligence, researches market trends, and compiles detailed reports on complex topics.

"It's like having a team of research assistants working around the clock," Elena says. "I can ask for a comprehensive analysis of emerging trends in renewable energy, and within minutes I have a detailed report with current data, competitive landscape analysis, and strategic insights."

The AI doesn't just gather information - it synthesizes findings from multiple sources, identifies patterns and trends, and presents insights in formats tailored to Elena's needs. Whether she needs an executive summary for stakeholders or a detailed technical analysis for her team, the AI adapts its output accordingly.

MCP for Project Managers

James, a project manager at a software company, struggled with keeping track of project status across multiple tools and platforms. His team used Slack for communication, GitHub for code management, Notion for documentation, and various other tools for different aspects of project management. Getting a comprehensive view of project status required manually checking multiple systems and correlating information across platforms.

MCP solved this coordination challenge by enabling James's AI assistant to access all these systems through standardized interfaces. Now, James can ask for project status updates and receive comprehensive reports that pull information from all relevant systems.

"Give me a status update on the mobile app project," James might ask. The AI then queries the GitHub MCP server for recent commits and pull requests, checks the Slack MCP server for relevant team discussions, reviews the Notion MCP server for documentation updates, and synthesizes all this information into a coherent status report.

The AI can identify potential issues by correlating information across systems. For example, it might notice that there's been a lot of discussion in Slack about a particular feature, but no corresponding code commits in GitHub, suggesting a potential bottleneck or technical challenge.

MCP for Content Marketing

Lisa, a content strategist for a global company, needed to create audio versions of her written content for accessibility and engagement purposes. The traditional process involved either hiring voice actors (expensive and time-consuming) or using low-quality text-to-speech tools that didn't match her brand's voice.

The ElevenLabs MCP integration provided an elegant solution. Lisa can now convert her written content into high-quality audio with consistent tone and style. The AI maintains her brand voice across different content formats while adapting the presentation for the audio medium.

"I can take a 3,000-word blog post and have a professional-quality audio version in minutes," Lisa explains. "The AI even adapts the content for audio consumption, adding natural pauses, emphasizing key points, and making the content more engaging for listeners."

This capability has opened up new content distribution channels for Lisa's company. They now offer podcast versions of their blog content, audio summaries for busy executives, and accessible content for visually impaired users - all generated efficiently through the MCP-enabled workflow.

Filesystem MCP Server

Alex, a freelance developer, worked on multiple client projects simultaneously, each with its own file structure, naming conventions, and organization requirements. Finding specific files or understanding project organization often consumed significant time, especially when switching between projects or returning to older work.

The file management capabilities enabled by MCP transformed Alex's workflow. Using MCP servers that integrate with Google Drive, OneDrive, and local file systems, Alex's AI assistant can now intelligently organize files based on content analysis rather than just metadata.

"I can ask my AI to organize all the files from the Johnson project by development phase, and it actually reads the files to understand what they are and where they belong," Alex explains. "It's not just moving files around based on names - it's understanding the content and creating logical organization structures."

The AI can scan directories, analyze file contents, group related files together, identify duplicates, create logical folder structures, and even flag files that might need attention or updating. This intelligent organization saves Alex hours of manual file management and helps maintain consistent organization standards across different projects.
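Duplicate detection is the most mechanical step in that list, and it is usually done by hashing file contents rather than comparing names. A minimal sketch, assuming the files are reachable on a local path (a filesystem MCP server would perform the equivalent reads on the AI's behalf); `find_duplicates` is a hypothetical helper, not part of any MCP server's API.

```python
import hashlib
from collections import defaultdict
from pathlib import Path
from typing import Dict, List

def file_digest(path: Path, chunk_size: int = 65536) -> str:
    """SHA-256 of a file's contents, read in chunks to bound memory use."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def find_duplicates(root: Path) -> List[List[Path]]:
    """Group files under `root` whose contents are byte-for-byte identical."""
    by_hash: Dict[str, List[Path]] = defaultdict(list)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            by_hash[file_digest(path)].append(path)
    # Only hashes shared by more than one file indicate duplicates.
    return [paths for paths in by_hash.values() if len(paths) > 1]
```

For example, calling `find_duplicates(Path("~/clients/johnson").expanduser())` (a placeholder path) returns lists of files that share identical contents, regardless of what they are named.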

PostgreSQL MCP Server for Data Analysis

Maria, a database administrator for a mid-sized company, spent significant time writing complex SQL queries for various stakeholders who needed data but didn't have the technical skills to extract it themselves. This created a bottleneck where business users had to wait for Maria's availability to get the data they needed.

The PostgreSQL MCP integration enabled Maria to create a natural language interface for database queries. Business users can now ask questions in plain English, and the AI translates these into appropriate SQL queries, executes them safely, and presents the results in understandable formats.

"Instead of getting requests like 'Can you pull the sales data for Q3 with regional breakdowns?' and then spending time writing queries, users can now ask the AI directly," Maria explains. "The AI understands the database structure, writes appropriate queries, and presents the results in formats that make sense to business users."

The system includes safeguards to ensure data security and prevent unauthorized access. Crucially, these are enforced by the server and the database rather than left to the model's judgment: queries run with the permissions the requesting user would normally have, so the AI can only perform queries that user is already authorized to perform.
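One way to make "executes them safely" concrete is to enforce read-only access in the database session itself instead of trusting the model to write only SELECT statements. The sketch below uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL so it runs anywhere; SQLite's `PRAGMA query_only` plays the role a read-only transaction (`BEGIN TRANSACTION READ ONLY`) would play in PostgreSQL. The `sales` table and the `run_user_query` helper are hypothetical, and per-user permissions would additionally come from connecting with a database role that has only that user's grants.

```python
import sqlite3
from typing import List, Tuple

def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a small, hypothetical sales table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [("EMEA", "Q3", 120.0), ("APAC", "Q3", 80.0), ("EMEA", "Q2", 50.0)],
    )
    conn.commit()
    # From here on, this connection refuses any statement that modifies data.
    conn.execute("PRAGMA query_only = ON")
    return conn

def run_user_query(conn: sqlite3.Connection, sql: str) -> List[Tuple]:
    """Run SQL generated on a user's behalf; writes are rejected by the database."""
    return conn.execute(sql).fetchall()

conn = make_demo_db()
print(run_user_query(conn, "SELECT region, SUM(amount) FROM sales "
                           "WHERE quarter = 'Q3' GROUP BY region ORDER BY region"))
# [('APAC', 80.0), ('EMEA', 120.0)]

try:
    run_user_query(conn, "DELETE FROM sales")
except sqlite3.DatabaseError as exc:
    # The write never reaches the data, whatever SQL the model produced.
    print("blocked:", exc)
```

Putting the guard in the database means a cleverly phrased request cannot talk the AI into a destructive query, because the session simply cannot execute one.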

MCP for DevOps

Tom, a DevOps engineer, managed infrastructure across multiple cloud providers and needed to coordinate deployments, monitor system health, and respond to incidents across diverse systems. The traditional approach required switching between multiple dashboards, command-line tools, and monitoring systems.

With GitHub and infrastructure MCP integrations, Tom's AI assistant can now manage repositories, track issues, coordinate development activities, and monitor system health through natural language instructions. Tom can ask the AI to create issues, update pull requests, manage branches, check system status, and even perform routine maintenance tasks.

The AI can also automate documentation generation, analyzing code changes and automatically updating documentation, creating release notes, and generating project summaries. This automation helps maintain project documentation without requiring significant manual effort from the development team.

MCP for Business Analysts

Rachel, a business analyst, needed to combine data from multiple sources to create comprehensive reports for executive leadership. Her traditional process involved manually extracting data from various systems, cleaning and formatting it in spreadsheets, and creating presentations that synthesized insights from multiple sources.

MCP enabled Rachel to create automated workflows that pull data from CRM systems, financial databases, marketing platforms, and operational tools, then summarize this information into executive-ready reports. The AI handles data extraction, cleaning, analysis, and presentation formatting.

"I can ask for a comprehensive business performance report, and the AI pulls current data from all our systems, identifies trends and anomalies, and creates a presentation that's ready for the executive team," Rachel explains. "What used to take me two days of manual work now happens in a couple of hours."

The AI doesn't just compile data - it provides analytical insights, identifies correlations between different metrics, flags unusual patterns that might require attention, and presents information in formats optimized for executive decision-making.

Natural Language Control

What's remarkable about all these use cases is how they follow a similar pattern: complex, multi-system workflows that previously required technical expertise and significant manual effort can now be triggered and managed through simple, natural language instructions.

The power of MCP lies not just in connecting AI to external systems, but in making sophisticated automation accessible to users regardless of their technical background. MCP is like giving AI 'hands' to perform the actions users request in natural language.

By enabling natural language control over complex technical operations, MCP opens up new possibilities for productivity and efficiency improvements.

Technical Deep Dive into MCP

How to Use MCP

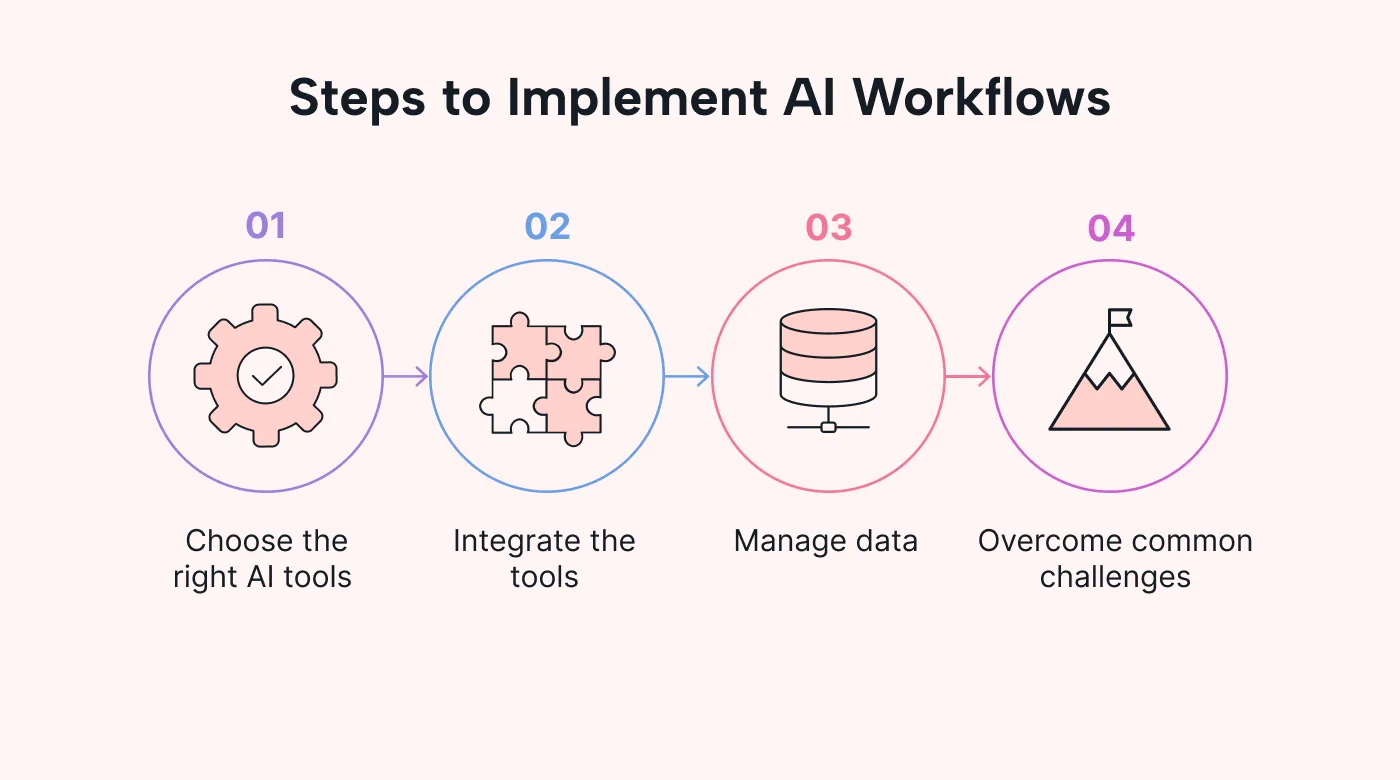

Understanding how to use MCP effectively requires grasping both the technical implementation and the practical workflow. The process typically follows these steps:

- Choose your approach: Start with existing MCP servers for common services, or build custom servers for specialized needs

- Set up the client: Configure your AI application (like Claude Desktop) to connect to MCP servers

- Test the connection: Use MCP's discovery requests (such as tools/list and resources/list) to verify which capabilities the server exposes

- Implement workflows: Design natural language interactions that leverage the connected resources and tools
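To make the "test the connection" step concrete, here is roughly what a client sends when it connects: an `initialize` request that negotiates a protocol version and capabilities, an `initialized` notification, then a discovery request such as `tools/list`. This is a hand-rolled sketch for illustration (real applications use an SDK); the client name and version are placeholders, and `2025-03-26` is one published protocol revision, so check the current specification for the version your server expects.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC request ids must be unique within a session

def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC request; the server must answer with the same id."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg

def notification(method: str) -> dict:
    """Build a JSON-RPC notification: no id, and no response expected."""
    return {"jsonrpc": "2.0", "method": method}

handshake = [
    # 1. Negotiate protocol version and capabilities.
    request("initialize", {
        "protocolVersion": "2025-03-26",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    }),
    # 2. Tell the server the client is ready for normal operation.
    notification("notifications/initialized"),
    # 3. Discover what the server offers.
    request("tools/list"),
]

for msg in handshake:
    print(json.dumps(msg))
```

The server's reply to `tools/list` is what lets a client (and the AI behind it) learn which tools exist without any hard-coded knowledge of that server.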

MCP JSON and Communication Protocol

At its core, MCP encodes all communication as JSON-RPC 2.0 messages (requests, responses, and notifications), which is what people usually mean by "MCP JSON". This choice provides several advantages:

- Standardization: JSON-RPC 2.0 is a well-established protocol with robust tooling and libraries

- Simplicity: The message format is human-readable and easy to debug

- Flexibility: JSON's structure accommodates complex data types while remaining lightweight

- Interoperability: Works seamlessly across different programming languages and platforms

A typical MCP JSON message might look like this:

```json
{
  "jsonrpc": "2.0",
  "method": "tools/call",
  "params": {
    "name": "search_files",
    "arguments": {
      "query": "project status",
      "path": "/documents"
    }
  },
  "id": 1
}
```
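On the other side, the server answers with a JSON-RPC response that carries the same id. A sketch in Python, assuming the `search_files` tool from the example request returns plain text; the file names in the result are invented for illustration. The `content` list and `isError` flag follow the shape the MCP specification uses for tool results.

```python
import json

call_request = {
    "jsonrpc": "2.0",
    "method": "tools/call",
    "params": {"name": "search_files",
               "arguments": {"query": "project status", "path": "/documents"}},
    "id": 1,
}

def make_tool_result(req: dict, text: str, is_error: bool = False) -> dict:
    """Wrap tool output in a JSON-RPC response that echoes the request id."""
    return {
        "jsonrpc": "2.0",
        "id": req["id"],  # lets the client match this response to its request
        "result": {
            "content": [{"type": "text", "text": text}],
            "isError": is_error,  # tool-level failure, distinct from a protocol error
        },
    }

response = make_tool_result(call_request, "Found 2 files: status-q3.md, roadmap.md")
print(json.dumps(response, indent=2))
```

Reporting tool failures through `isError` rather than a JSON-RPC error keeps the failure visible to the model, which can then try a different approach.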

MCP Transports: Flexible Connection Options

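MCP transports determine how messages travel between clients and servers. The specification defines two standard transports and leaves room for others; the JSON-RPC messages are the same whichever one you use:

- Standard input/output (stdio): The client launches the server as a local subprocess and exchanges newline-delimited messages over its stdin and stdout. Ideal for desktop applications and local integrations

- Streamable HTTP: The server runs as an independent process that clients reach over HTTP, optionally streaming responses with Server-Sent Events. Suited to web-based services and remote connections (it replaces the HTTP-plus-SSE transport from earlier spec revisions)

- Custom transports: Implementations may add other mechanisms, such as WebSockets for real-time, bidirectional communication, as long as they carry the same message format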

This transport flexibility means you can use the same MCP protocol whether you're connecting to a local database, a cloud service, or a custom enterprise system.
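With the stdio transport, framing is deliberately simple: each JSON-RPC message is one line of UTF-8 text, and messages must not contain embedded newlines. A minimal framing sketch in Python, using an in-memory buffer in place of a subprocess's stdin and stdout so it runs standalone; the helper names are illustrative, not SDK functions.

```python
import io
import json
from typing import Iterator, TextIO

def write_message(stream: TextIO, msg: dict) -> None:
    """Send one JSON-RPC message as a single line."""
    line = json.dumps(msg)  # without indent, newlines inside strings are escaped
    if "\n" in line:  # defensive check; stdio framing forbids raw newlines
        raise ValueError("stdio messages must not contain embedded newlines")
    stream.write(line + "\n")
    stream.flush()

def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield each newline-delimited JSON-RPC message, skipping blank lines."""
    for line in stream:
        if line.strip():
            yield json.loads(line)

# An in-memory buffer stands in for a server process's stdin/stdout.
buf = io.StringIO()
write_message(buf, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(buf, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                    "params": {"name": "search_files",
                               "arguments": {"query": "line one\nline two"}}})
buf.seek(0)
for msg in read_messages(buf):
    print(msg["id"], msg["method"])
```

Note that the second message's query contains a newline character, yet it still travels as a single line because `json.dumps` escapes it.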

How to Test MCP Server

Learning how to test MCP server implementations is crucial for reliable deployments. The MCP ecosystem provides several testing approaches:

MCP Inspector: An interactive debugging tool from the MCP project (started with `npx @modelcontextprotocol/inspector`) that allows developers to:

- Inspect server capabilities and available resources

- Test tool calls and resource access in real-time

- Debug communication issues and protocol compliance

- Validate server responses and error handling

Unit Testing: Standard testing frameworks can verify individual server components:

- Test resource discovery and access

- Validate tool execution and error handling

- Verify authentication and authorization mechanisms

- Check protocol compliance and message formatting
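A unit test for a single tool can be an ordinary test case that calls the handler directly, with no client or transport involved. The sketch below uses Python's built-in `unittest`; the `search_files` handler and its in-memory `DOCUMENTS` index are hypothetical, and the result follows the `content`/`isError` shape that MCP tool results use.

```python
import unittest
from typing import Dict

# Hypothetical tool implementation: in a real server this function would be
# registered with an MCP SDK; here it is plain Python so it can be tested directly.
DOCUMENTS: Dict[str, str] = {
    "/documents/status.md": "Project status: on track",
    "/documents/notes.md": "Meeting notes",
}

def search_files(query: str, path: str) -> dict:
    """Return an MCP-style tool result listing files under `path` containing `query`."""
    if not query:
        return {"content": [{"type": "text", "text": "query must not be empty"}],
                "isError": True}
    hits = sorted(p for p, text in DOCUMENTS.items()
                  if p.startswith(path) and query.lower() in text.lower())
    return {"content": [{"type": "text", "text": "\n".join(hits)}], "isError": False}

class SearchFilesTest(unittest.TestCase):
    def test_finds_matching_file(self):
        result = search_files("project status", "/documents")
        self.assertFalse(result["isError"])
        self.assertEqual(result["content"][0]["text"], "/documents/status.md")

    def test_empty_query_is_a_tool_error(self):
        self.assertTrue(search_files("", "/documents")["isError"])

    def test_respects_path_prefix(self):
        self.assertEqual(search_files("meeting", "/other")["content"][0]["text"], "")
```

Saved as, say, `test_search_files.py`, this runs with `python -m unittest test_search_files`. Keeping tool logic in plain functions like this makes it testable without starting a server.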

Integration Testing: End-to-end testing ensures complete workflow functionality:

- Test client-server communication flows

- Validate real-world usage scenarios

- Check performance under various load conditions

- Verify security and access control mechanisms

MCP vs. Alternative Approaches

To fully appreciate MCP's value, it's helpful to understand how it compares to other AI integration approaches.

RAG vs MCP

RAG vs MCP represents a common comparison, but they actually solve different problems:

- RAG (Retrieval-Augmented Generation) focuses on enhancing AI responses by retrieving relevant information from knowledge bases. It's primarily about improving AI's knowledge and context.

- MCP focuses on enabling AI to take actions and access live data across multiple systems. It's about expanding AI's capabilities and reach.

Many implementations use both approaches together - RAG for knowledge enhancement and MCP for system integration. MCP can also facilitate RAG workflows by providing access to dynamic data sources.

A2S vs MCP

A2S vs MCP (Agent-to-System vs Model Context Protocol) highlights different architectural philosophies:

- A2S approaches typically focus on direct agent-to-system connections with custom protocols

- MCP provides a standardized protocol that works across different AI systems and data sources

MCP's standardization advantage becomes clear in complex environments with multiple AI systems and data sources.

MCP Sampling: Enabling Agentic AI Behaviors

MCP sampling represents one of the most sophisticated features of the protocol, fundamentally changing how MCP servers can leverage AI capabilities. Unlike traditional approaches where AI clients always initiate requests, MCP sampling flips this relationship - allowing MCP servers to request LLM completions ("generations") from language models via clients. This capability enables what developers call "agentic behaviors," where AI systems can make intelligent decisions and perform multi-step reasoning within the context of server operations.

Understanding how MCP servers work with sampling reveals the protocol's real power. When an MCP server encounters a task that requires AI reasoning, such as analyzing data, making decisions, or generating structured outputs, it can send a sampling/createMessage request to the client. The client then coordinates with the user and the LLM and returns the completion to the server. This creates a nested AI interaction in which LLM calls occur inside other MCP server features, enabling workflows that were previously difficult to build. It also means the server needs no model API keys of its own: it relies on the model access the client already has.

The sampling process is designed around "human-in-the-loop" control, which maintains user trust. The specification recommends (as a SHOULD rather than a MUST) that when a server requests sampling, the client show the user the prompt and context so they can edit, approve, or reject it before anything is sent to the LLM. Likewise, the user should be able to review and modify the generated completion before it is returned to the server. This dual checkpoint system keeps users in control of what the AI sees and generates, addressing critical trust and safety concerns in automated AI workflows.
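The two checkpoints described above can be expressed as a small control flow on the client side. This is a hypothetical sketch, not an SDK API: `handle_sampling`, `Decision`, and the reviewer and model callables are stand-ins for whatever UI and LLM integration a real client provides.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Decision:
    approved: bool
    edited_text: Optional[str] = None  # the user's replacement text, if they edited

# A reviewer is whatever UI the client shows; here it is just a callable.
Reviewer = Callable[[str], Decision]

def handle_sampling(prompt: str, review: Reviewer,
                    call_llm: Callable[[str], str]) -> Optional[str]:
    """Apply both review checkpoints around a single LLM call.

    Returns the (possibly edited) completion, or None if the user rejects
    either the prompt or the completion.
    """
    first = review(prompt)  # checkpoint 1: what the model will see
    if not first.approved:
        return None
    final_prompt = prompt if first.edited_text is None else first.edited_text
    completion = call_llm(final_prompt)
    second = review(completion)  # checkpoint 2: what the server will receive
    if not second.approved:
        return None
    return completion if second.edited_text is None else second.edited_text


approve_all = lambda text: Decision(approved=True)
print(handle_sampling("Summarize this diff", approve_all, lambda p: f"summary of: {p}"))
```

Returning `None` on rejection lets the client send the server an error instead of a completion, so the server never sees content the user declined.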

Sampling requests use the same JSON-RPC format as the rest of MCP and support multi-modal interactions: messages can carry text, image, or audio content, so servers can request AI analysis of diverse data formats. Model preferences use an abstraction that combines numeric priorities (costPriority, speedPriority, and intelligencePriority, each between 0 and 1) with optional model hints, so servers can express their needs without being tied to a specific AI provider. For example, a server might set a high intelligence priority with a "claude-3-sonnet" hint, and the client remains free to map this to an equivalent model from a different provider, such as Gemini or GPT-4.
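For concreteness, here is roughly what a `sampling/createMessage` request looks like, built as a Python dict, together with one possible way a client could resolve model hints. The field names (`messages`, `modelPreferences`, `hints`, `intelligencePriority`, `maxTokens`) follow the MCP specification; the prompt, priority values, and model names are illustrative, and `pick_model` is a hypothetical client policy, since the specification leaves final model selection to the client.

```python
from typing import List

# A sampling/createMessage request as a server might send it to the client.
sampling_request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "sampling/createMessage",
    "params": {
        "messages": [
            {"role": "user",
             "content": {"type": "text", "text": "Rate the readability of this function."}}
        ],
        "modelPreferences": {
            "hints": [{"name": "claude-3-sonnet"}, {"name": "claude"}],
            "intelligencePriority": 0.8,  # each priority ranges from 0 to 1
            "speedPriority": 0.5,
            "costPriority": 0.3,
        },
        "systemPrompt": "You are a careful code reviewer.",
        "maxTokens": 200,
    },
}

def pick_model(hints: List[dict], available: List[str], fallback: str) -> str:
    """Hypothetical policy: treat hints as substrings, in order; first match wins."""
    for hint in hints:
        for model in available:
            if hint["name"] in model:
                return model
    return fallback  # a real client might choose using the numeric priorities here

prefs = sampling_request["params"]["modelPreferences"]
print(pick_model(prefs["hints"], ["gemini-1.5-pro", "claude-3-5-sonnet"], "gemini-1.5-pro"))
```

Here the exact hint finds no match, so the broader "claude" hint selects `claude-3-5-sonnet`; a client with no Claude models would fall back to its own choice.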

The practical applications of MCP sampling extend far beyond simple text generation. Servers can use sampling for intelligent decision-making based on available data, creating structured outputs in specific formats, completing multi-step workflows that require reasoning, and analyzing external data to determine appropriate responses. A code review MCP server, for instance, might use sampling to analyze code quality, suggest improvements, and even generate refactored code - all while maintaining human oversight at each step. This capability transforms MCP servers from simple data bridges into intelligent agents that can enhance and automate complex workflows through AI-powered reasoning.

MCP Use Cases

The MCP use cases extend far beyond simple data access to include:

- Multi-modal AI workflows: Combining text, image, and data processing

- Cross-platform automation: Orchestrating actions across different software ecosystems

- Real-time decision making: Enabling AI to respond to live data and changing conditions

- Collaborative AI systems: Multiple AI agents working together through shared MCP infrastructure

The next section will explore who should consider adopting MCP and how different types of organizations and professionals can benefit from this technology.

Who Should Use MCP?

Here's the thing about MCP: it's not just for hardcore developers or AI researchers. The beauty of this protocol is that it makes sophisticated AI integration accessible to anyone who works with digital tools - which, let's be honest, is pretty much everyone these days.

For Developers and Engineers

If you're building AI applications, MCP is a game-changer. Instead of spending weeks building custom integrations for each data source, you can leverage existing MCP servers or build new ones using standardized patterns. It's like having a universal adapter for every API you'll ever need.

The developer community is already embracing MCP enthusiastically. Major development tools like Zed, Replit, Codeium, and Sourcegraph are integrating MCP support, recognizing that it represents the future of AI-assisted development.

For Business Professionals: AI That Actually Helps

You don't need to code to benefit from MCP. If you use tools like Slack, Google Drive, Notion, or any other business software, MCP-enabled AI assistants can help you automate workflows that currently eat up hours of your day.

Think about it: how much time do you spend copying information between systems, searching for files, or manually coordinating tasks across different platforms? MCP makes all of that automatable through simple conversations with AI.

Strategic Advantage For Organizations

For companies, MCP represents a strategic opportunity to build AI-native operations without vendor lock-in. You can start with one AI provider and switch to another without rebuilding your entire integration infrastructure. It's future-proofing for an AI-driven world.

Early adopters like Block and Apollo are already seeing the benefits, using MCP to enhance their platforms and provide better AI-powered experiences to their users.

When Organizations Should Consider MCP Adoption

Beyond individual roles, certain organizational characteristics and situations make MCP adoption particularly beneficial. Organizations with multiple AI initiatives or projects can benefit significantly from MCP's standardized approach, as it enables knowledge and infrastructure sharing across different teams and projects.

Companies that work with diverse data sources or have complex integration requirements are natural candidates for MCP adoption. The protocol's ability to abstract away integration complexity becomes more valuable as the number and diversity of data sources increases. Organizations that have struggled with the maintenance burden of custom integrations often find that MCP provides significant operational benefits.

Startups and organizations that need to move quickly can benefit from MCP's ability to accelerate AI development timelines. The protocol enables rapid prototyping and iteration by eliminating the need to build custom integrations for each experiment or feature. This acceleration can be particularly valuable in competitive markets where time-to-market is critical.

Enterprise organizations with strict security and governance requirements can benefit from MCP's standardized approach to access control. Recent revisions of the specification define an OAuth 2.1-based authorization framework for HTTP transports, and routing AI access through MCP servers gives organizations a single place to enforce policies and log activity. This makes it easier to implement consistent security policies across multiple AI applications and data sources.

Organizations that want to avoid vendor lock-in or maintain flexibility in their technology choices should consider MCP adoption as a strategic investment. The protocol's vendor-agnostic design provides protection against technology obsolescence and enables organizations to adapt to changing technology landscapes without rebuilding their integration infrastructure.

Evaluating MCP Readiness

Organizations considering MCP adoption should evaluate their readiness across several dimensions. Technical readiness includes having development teams with the skills needed to implement and maintain MCP-based solutions, as well as infrastructure that can support the protocol's requirements.

Organizational readiness involves having clear AI strategies and use cases that can benefit from improved integration capabilities. Organizations that are still in the early stages of AI exploration may want to start with simpler approaches before adopting MCP, while organizations with mature AI initiatives are often well-positioned to benefit from the protocol's advanced capabilities.

Cultural readiness is also important, as MCP adoption often requires changes to development processes and workflows. Organizations that are comfortable with open standards and collaborative development approaches tend to be more successful with MCP adoption than those that prefer proprietary or tightly controlled solutions.

The growing ecosystem of MCP servers and tools makes adoption increasingly accessible for organizations across different industries and technical maturity levels. As the protocol continues to evolve and gain adoption, the barriers to entry continue to decrease while the potential benefits continue to grow.

In the next section, we'll explore practical steps for getting started with MCP and provide guidance for organizations ready to begin their MCP journey.

Getting Started with MCP

Remember Hailey's story about finally understanding MCP? Her journey started exactly where yours can: with a simple question and a willingness to experiment. "If you're comfortable with Python and the command line, you'll be up and running in no time," she says. "And even if you're not - don't worry. We'll walk through everything step by step."

The Easiest Way to Start with Claude Desktop

The simplest way to experience MCP is through Claude Desktop, which has built-in MCP support. You can connect to existing MCP servers and start automating workflows within minutes. Here's what you need:

- Download Claude Desktop (if you haven't already)

- Choose your first integration - Start with something you use daily, like Google Drive or Slack

- Follow the setup guide - The official MCP documentation for Claude Desktop provides step-by-step instructions

- Start with simple requests - Ask Claude to help with tasks you normally do manually
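Connecting a server in Claude Desktop comes down to adding an entry to its `claude_desktop_config.json` file, under an `mcpServers` key that names the command used to launch the server. The sketch below prints an updated config that adds the reference filesystem server (`@modelcontextprotocol/server-filesystem`, launched via `npx`, so Node.js is assumed). The Documents folder is a placeholder and the path shown is the usual macOS location, so check the official setup guide for your platform before saving anything.

```python
import copy
import json
from pathlib import Path

def add_server(config: dict, name: str, command: str, args: list) -> dict:
    """Return a copy of `config` with one more entry under "mcpServers"."""
    updated = copy.deepcopy(config)  # leave the caller's config untouched
    updated.setdefault("mcpServers", {})[name] = {"command": command, "args": args}
    return updated

# Usual location on macOS; on Windows the file lives under %APPDATA%\Claude\.
CONFIG_PATH = (Path.home() / "Library" / "Application Support" / "Claude"
               / "claude_desktop_config.json")

existing = json.loads(CONFIG_PATH.read_text()) if CONFIG_PATH.exists() else {}
updated = add_server(
    existing,
    "filesystem",
    "npx",
    # Placeholder directory: list only the folders you want Claude to access.
    ["-y", "@modelcontextprotocol/server-filesystem", str(Path.home() / "Documents")],
)
# Review the output, then save it to CONFIG_PATH and restart Claude Desktop.
print(json.dumps(updated, indent=2))
```

Merging into the existing file, rather than overwriting it, preserves any servers you have already configured.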

Deeper Dive: Build Your Own MCP Server

If you want to go deeper, building a custom MCP server is surprisingly straightforward. Hailey built her documentation search server in just a few hours using the official MCP SDK.

The process involves:

- Installing the MCP SDK

- Defining what data or tools you want to expose

- Writing simple functions to handle requests

- Testing with the MCP Inspector (a handy debugging tool)
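To show what the SDK does for you, here is a dependency-free sketch of the request handling behind a small documentation-search server. It is not the official SDK: in the Python SDK, the `FastMCP` class lets you register a tool with an `@mcp.tool()` decorator and handles all of this. The tool name, sample docs, and server name below are hypothetical. The sketch answers `initialize`, `tools/list`, and `tools/call`, and returns standard JSON-RPC error codes for anything else.

```python
import json
import sys
from typing import Optional, Union

# Hypothetical documentation index; a real server would query actual docs.
DOCS = {
    "install": "Run the installer, then restart the app.",
    "auth": "Create an API key in the settings page.",
}

# Tool metadata returned by tools/list; inputSchema is a JSON Schema object.
TOOLS = [{
    "name": "search_docs",
    "description": "Search internal documentation by keyword",
    "inputSchema": {"type": "object",
                    "properties": {"query": {"type": "string"}},
                    "required": ["query"]},
}]

def search_docs(query: str) -> str:
    """Case-insensitive keyword match over titles and bodies."""
    q = query.lower()
    hits = [f"{title}: {body}" for title, body in DOCS.items()
            if q in f"{title} {body}".lower()]
    return "\n".join(hits) or "No matches."

def error(msg_id: Union[int, str], code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}

def handle(msg: dict) -> Optional[dict]:
    """Return the JSON-RPC response for one message, or None for notifications."""
    if "id" not in msg:
        return None  # e.g. notifications/initialized expects no reply
    method, params = msg["method"], msg.get("params", {})
    if method == "initialize":
        result = {
            # Simplification: accept whatever version the client proposes.
            "protocolVersion": params.get("protocolVersion", "2025-03-26"),
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "docs-search", "version": "0.1.0"},
        }
    elif method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "search_docs":
            return error(msg["id"], -32602, f"Unknown tool: {params.get('name')}")
        query = params.get("arguments", {}).get("query", "")
        result = {"content": [{"type": "text", "text": search_docs(query)}],
                  "isError": False}
    else:
        return error(msg["id"], -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg["id"], "result": result}

def serve() -> None:
    """Answer newline-delimited JSON-RPC requests from stdin on stdout."""
    for line in sys.stdin:
        if line.strip():
            response = handle(json.loads(line))
            if response is not None:
                sys.stdout.write(json.dumps(response) + "\n")
                sys.stdout.flush()
```

To run it as a stdio server, call `serve()` from the script's `__main__` block; you can then point the MCP Inspector at it (for example, `npx @modelcontextprotocol/inspector python server.py`).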

Start Small, Think Big

The key to successful MCP adoption is starting with one specific pain point in your workflow. Don't try to automate everything at once. Pick one repetitive task that annoys you daily - maybe it's searching for files, updating project status, or researching competitors - and build an MCP solution for that.

As Alexey discovered, "Rather than seeing LLMs as tools to generate snippets of content, I now view them as collaborators in my creative process." That shift in perspective often happens naturally once you start using MCP in your daily work.

Development Resources and Documentation

The MCP ecosystem provides comprehensive resources for developers and organizations getting started with the protocol. The official documentation includes detailed specifications, implementation guides, and example code that demonstrate best practices for both server and client development.

The MCP specification provides the authoritative reference for protocol implementation, including detailed descriptions of message formats, lifecycle management, and security considerations. This specification is essential reading for developers building custom servers or clients, as it ensures compatibility and interoperability with other MCP implementations.

SDK documentation provides language-specific guidance for implementing MCP servers and clients using the provided development kits. These resources include API references, tutorial content, and example implementations that demonstrate common patterns and best practices.

Community resources, including forums, GitHub repositories, and example implementations, provide additional support for developers working with MCP. The open-source nature of the protocol encourages community contribution and knowledge sharing, creating a growing repository of examples and best practices.

Best Practices for Initial Implementation

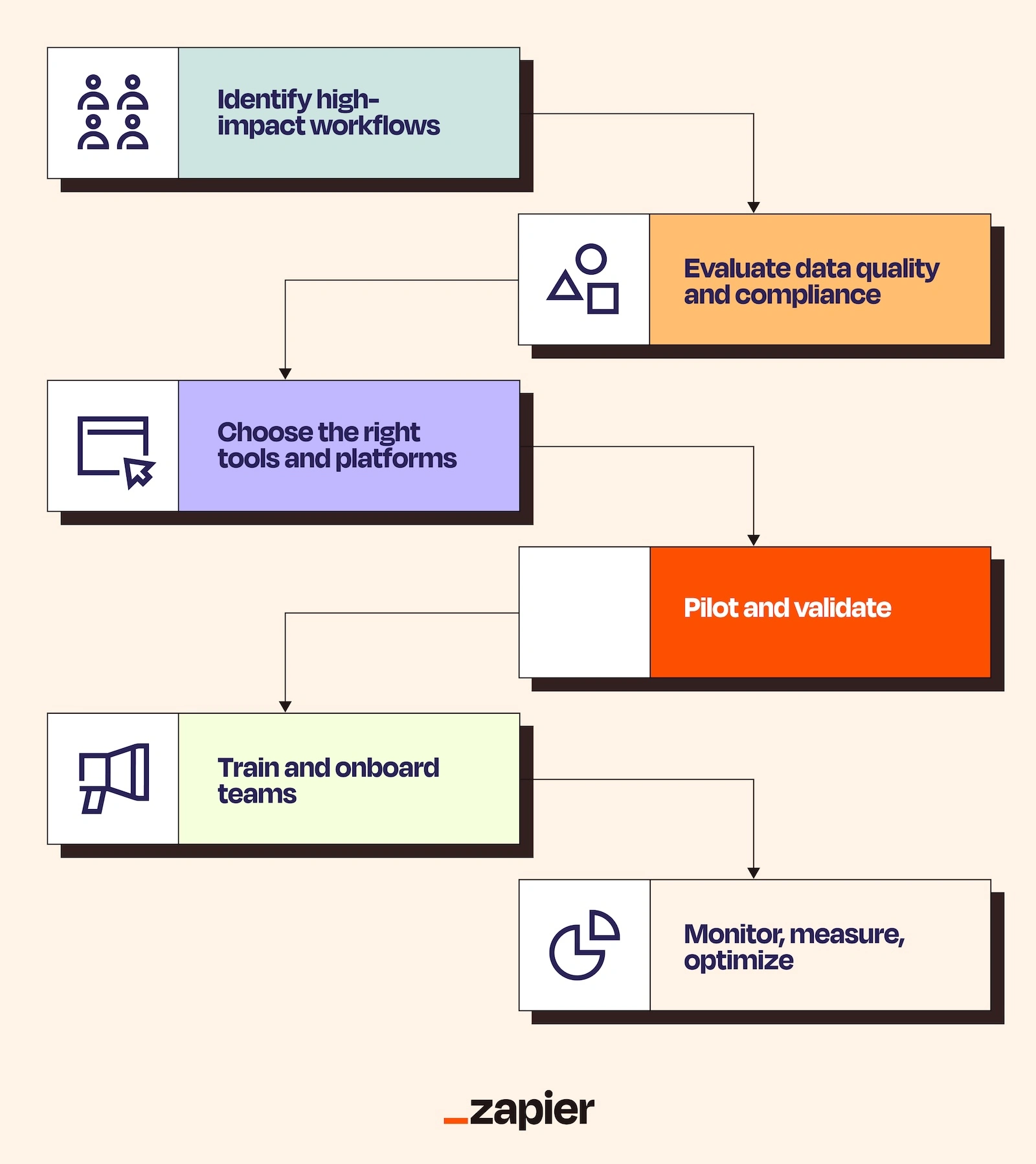

Successful MCP adoption typically follows certain patterns and best practices that help organizations maximize value while minimizing risk and complexity. These practices are based on the experiences of early adopters and the recommendations of the protocol developers.

Starting small and scaling gradually is one of the most important best practices for MCP adoption. Organizations should begin with simple use cases that provide clear value and can be implemented quickly, then expand to more complex scenarios as they gain experience and confidence with the protocol. This approach allows organizations to learn and adapt their implementation strategies based on real-world experience.

Focusing on high-impact use cases during initial implementation helps demonstrate MCP value to stakeholders and builds momentum for broader adoption. Organizations should identify integration challenges that are currently causing significant pain or limiting AI capabilities, as these represent the best opportunities for demonstrating MCP benefits.

Security and governance considerations should be addressed from the beginning of MCP implementation, even for proof-of-concept projects. Establishing appropriate authentication, authorization, and auditing practices early in the adoption process makes it easier to scale to production deployments and ensures that security requirements are met from the start.

Documentation and knowledge sharing are critical for successful MCP adoption, particularly in organizations where multiple teams or individuals will be working with the protocol. Creating internal documentation that captures implementation decisions, best practices, and lessons learned helps ensure that knowledge is preserved and can be shared across the organization.

Measuring Success and ROI

Organizations adopting MCP should establish clear metrics for measuring success and return on investment. These metrics help justify continued investment in MCP adoption and provide guidance for optimizing implementation strategies.

Development velocity metrics, such as the time required to implement new integrations or add new data sources to AI applications, provide direct measures of MCP's impact on development productivity. Many organizations report significant improvements in these metrics after adopting MCP, with some citing 50-70% reductions in integration development time, though results depend heavily on the starting point.

Maintenance overhead metrics, including time spent on integration maintenance, bug fixes, and updates, demonstrate MCP's operational benefits. The standardized approach provided by MCP typically reduces maintenance overhead significantly compared to custom integration approaches.

User adoption and satisfaction metrics help measure the business impact of MCP-enabled AI applications. These metrics might include user engagement with AI features, task completion rates, or user satisfaction scores. Improvements in these metrics indicate that MCP is enabling more valuable and usable AI applications.

Cost metrics, including development costs, infrastructure costs, and operational expenses, provide comprehensive measures of MCP's financial impact. Organizations should track both direct costs (such as development time and infrastructure) and indirect costs (such as maintenance overhead and opportunity costs) to get a complete picture of MCP's return on investment.
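These metrics reduce to simple arithmetic once you have before-and-after measurements. A minimal sketch with made-up numbers (placeholders, not benchmark results) showing a percentage reduction in build time, and the same comparison once ongoing maintenance is included:

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage decrease from a baseline measurement to a new one."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) * 100 / before

def total_hours(build: float, maintain_per_month: float, months: int) -> float:
    """Direct build effort plus ongoing maintenance over a time window."""
    return build + maintain_per_month * months

# Hypothetical figures for adding one data source to an AI application.
custom = {"build": 80.0, "maintain_per_month": 10.0}  # point-to-point integration
mcp = {"build": 32.0, "maintain_per_month": 3.0}      # via an MCP server

print(f"{percent_reduction(custom['build'], mcp['build']):.0f}% less build time")
# 60% less build time

months = 12
before = total_hours(custom["build"], custom["maintain_per_month"], months)  # 200.0
after = total_hours(mcp["build"], mcp["maintain_per_month"], months)         # 68.0
print(f"{percent_reduction(before, after):.0f}% less total effort over {months} months")
# 66% less total effort over 12 months
```

Including maintenance changes the picture because ongoing upkeep, not initial build time, often dominates the cost of custom integrations.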

Planning for Scale

While organizations can start with MCP using simple implementations and existing servers, planning for scale from the beginning helps ensure that initial implementations can grow to support broader organizational needs. This planning involves considering both technical and organizational factors that affect scalability.

Technical scalability considerations include infrastructure requirements for supporting multiple MCP servers and clients, security and governance requirements for production deployments, and integration with existing development and deployment processes. Organizations should evaluate these requirements early in their adoption process to avoid architectural decisions that might limit future scalability.

Organizational scalability involves planning for broader adoption across multiple teams, projects, and use cases. This planning includes developing training and documentation resources, establishing governance processes for MCP server development and deployment, and creating support structures for teams adopting MCP.

The growing MCP ecosystem provides increasing support for scalable deployments, with new tools and services emerging to support enterprise adoption. Organizations should stay engaged with the MCP community to take advantage of these developments and contribute their own experiences and innovations back to the ecosystem.

Getting started with MCP represents an investment in the future of AI integration, providing organizations with the foundation needed to build sophisticated, scalable AI applications. The next section will explore what the future holds for MCP and how organizations can prepare for the continued evolution of AI integration technology.

The Future of AI Integration

The Model Context Protocol represents more than just a technical solution to current integration challenges - it provides a foundation for a fundamentally different relationship between AI systems and the digital infrastructure that powers modern organizations. Understanding the trajectory of MCP development and adoption helps organizations prepare for an AI-native future where intelligent systems become seamlessly integrated into all aspects of business operations.

Growing Industry Adoption

The early adoption patterns for MCP suggest that the protocol is gaining traction across diverse industries and use cases. Major technology companies and development tool providers are already integrating MCP support into their platforms, creating a positive feedback loop that accelerates ecosystem growth and adoption.

Block, a leading financial technology company, has emerged as one of the most vocal early adopters of MCP. As noted by their Chief Technology Officer, "open technologies like the Model Context Protocol are the bridges that connect AI to real-world applications, ensuring innovation is accessible, transparent, and rooted in collaboration" [1]. This endorsement from a major enterprise technology company signals the protocol's potential for widespread business adoption.

Development tool companies are also driving MCP adoption by integrating the protocol into popular development environments. Companies like Zed, Replit, Codeium, and Sourcegraph are "working with MCP to enhance their platforms - enabling AI agents to better retrieve relevant information to further understand the context around a coding task and produce more nuanced and functional code with fewer attempts" [1].

This adoption by development tool providers is particularly significant because it exposes MCP to the developer community that will ultimately drive broader adoption. As developers become familiar with MCP through their daily development workflows, they are more likely to advocate for its adoption in their organizations and contribute to the growing ecosystem of MCP servers and applications.

Expanding Ecosystem and Platform Support

The MCP ecosystem is expanding rapidly beyond its initial focus on AI applications to include broader platform support and integration capabilities. Cloud providers, enterprise software vendors, and specialized service providers are beginning to offer MCP-compatible interfaces, creating a more comprehensive ecosystem for AI integration.

This ecosystem expansion is driven by the recognition that MCP provides a competitive advantage for service providers who want to make their platforms more accessible to AI applications. By offering MCP interfaces, service providers can tap into the growing market for AI-powered automation and integration without needing to build custom AI capabilities themselves.

The standardized nature of MCP also enables the development of specialized tools and services that support MCP adoption. These include monitoring and management tools for MCP deployments, security and governance solutions designed specifically for MCP environments, and development tools that simplify the process of building and maintaining MCP servers.

Enterprise-Grade Capabilities

As MCP adoption moves from experimental use cases to production deployments, the protocol is evolving to support enterprise-grade requirements for security, scalability, and governance. These enhancements are essential for enabling MCP adoption in large organizations with complex compliance and operational requirements.

Security enhancements include more sophisticated authentication and authorization mechanisms, improved audit logging and monitoring capabilities, and better support for enterprise security policies and procedures. These improvements ensure that MCP can meet the stringent security requirements of regulated industries and large enterprises.

Scalability improvements focus on supporting high-volume deployments with multiple MCP servers and clients, efficient resource utilization, and robust error handling and recovery mechanisms. These enhancements enable MCP to support mission-critical applications and large-scale automation workflows.

Governance capabilities include better support for policy enforcement, compliance reporting, and operational oversight. These features help organizations maintain control over their AI integrations while enabling the flexibility and innovation that MCP provides.

AI-Native Development Paradigms

Perhaps the most significant long-term impact of MCP will be its role in enabling AI-native development paradigms where AI becomes a first-class participant in software development and business operations. This transformation goes beyond simply adding AI features to existing applications to fundamentally reimagining how humans and AI systems collaborate.

In AI-native development environments, developers will interact with code, infrastructure, and business systems through natural language interfaces powered by MCP. Instead of writing code to integrate with APIs, developers will describe their intentions to AI systems that can automatically discover and interact with relevant services through MCP interfaces.
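To make "discover and interact" a little more concrete: under the MCP specification [2], messages are JSON-RPC 2.0 objects, and a client asks a server what it offers with a `tools/list` request, then invokes one of the advertised tools with `tools/call`. The minimal sketch below only builds and inspects those messages; it assumes a hypothetical server response with two made-up tools (`search_issues`, `post_message`), and it uses a simple keyword match as a stand-in for the choice an AI model would normally make. Transport and the initialization handshake are left out.

```python
import json
from itertools import count

# Unique JSON-RPC request ids; JSON-RPC 2.0 only requires uniqueness per session.
_ids = count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request object of the kind MCP clients send."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def find_tool(list_result, keyword):
    """Return the first advertised tool whose name or description mentions keyword.

    Stand-in for the model's decision: a real AI client would show the tool
    list to the model and let it pick. Returns None if nothing matches.
    """
    for tool in list_result.get("tools", []):
        text = (tool["name"] + " " + tool.get("description", "")).lower()
        if keyword.lower() in text:
            return tool
    return None


# Hypothetical tools/list result (tool names and schemas are made up for illustration).
sample_list_result = {
    "tools": [
        {"name": "search_issues", "description": "Search GitHub issues",
         "inputSchema": {"type": "object",
                         "properties": {"query": {"type": "string"}},
                         "required": ["query"]}},
        {"name": "post_message", "description": "Post a message to a Slack channel",
         "inputSchema": {"type": "object",
                         "properties": {"channel": {"type": "string"},
                                        "text": {"type": "string"}},
                         "required": ["channel", "text"]}},
    ]
}

discover = make_request("tools/list")            # step 1: ask what the server offers
tool = find_tool(sample_list_result, "slack")    # step 2: pick a relevant tool
call = make_request("tools/call", {              # step 3: invoke it with arguments
    "name": tool["name"],
    "arguments": {"channel": "#releases", "text": "Build passed"},
})
print(json.dumps(call))
```

The point of the sketch is that the client never hard-codes a Slack or GitHub integration: it reads the tool names and `inputSchema` the server advertises and builds a generic `tools/call` request from them.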

This paradigm shift extends to business operations, where AI agents will continuously monitor systems, analyze data, and take corrective actions through MCP-enabled integrations. These agents will operate with a level of autonomy and sophistication that is difficult to achieve with traditional integration approaches, enabling new forms of intelligent automation and optimization.

The AI-native future enabled by MCP also includes more sophisticated human-AI collaboration patterns. Instead of using AI as an external tool, humans will work alongside AI systems that have deep understanding of business context and can proactively provide assistance and insights based on real-time data and system state.

Standardization and Interoperability

As MCP adoption grows, the protocol is likely to influence broader standardization efforts in AI integration and automation. The success of MCP demonstrates the value of open, standardized approaches to AI integration, which may encourage similar standardization efforts in related areas.

Interoperability between different AI platforms and integration protocols will become increasingly important as organizations adopt multiple AI tools and services. MCP's open design and vendor-agnostic approach position it well to serve as a foundation for broader interoperability standards that enable seamless integration across diverse AI ecosystems.

The protocol's influence may also extend to related standards for AI governance, security, and compliance. The patterns and practices developed for MCP deployments can inform broader industry standards for managing AI systems in enterprise environments.

Preparing for Continued Evolution

Organizations adopting MCP should prepare for continued evolution of the protocol and ecosystem. This preparation involves staying engaged with the MCP community, contributing to protocol development, and building internal capabilities that can adapt to changing requirements and opportunities.

Technical preparation includes building development and operational capabilities that can support evolving MCP requirements, maintaining flexibility in architecture and implementation decisions, and investing in training and knowledge development for teams working with MCP.

Strategic preparation involves developing organizational capabilities for evaluating and adopting new AI technologies, building partnerships with other organizations in the MCP ecosystem, and contributing to the broader community through open-source development and knowledge sharing.

The continued evolution of MCP will be driven by the needs and contributions of its user community. Organizations that actively participate in this evolution will be best positioned to benefit from new capabilities and influence the direction of protocol development.

Where We're Heading

The Model Context Protocol isn't just solving today's problems - it's building the foundation for tomorrow's AI-native world. MCP is the infrastructure that makes the shift toward AI-native development and operations possible.

The Growing Movement

The momentum behind MCP is hard to ignore. When Anthropic announced the protocol, early adopters like Block and Apollo had already integrated it into their systems, and development-tool companies including Zed, Replit, Codeium, and Sourcegraph were working with MCP to enhance their platforms [1]. This isn't just early adoption - it's the beginning of a fundamental shift in how we think about AI integration.

As Block's CTO puts it, "open technologies like the Model Context Protocol are the bridges that connect AI to real-world applications, ensuring innovation is accessible, transparent, and rooted in collaboration" [1]. This vision of open, collaborative AI infrastructure is becoming reality faster than many expected.

What This Means for You

Whether you're a developer, business professional, or organizational leader, MCP represents an opportunity to be part of this transformation rather than just watching it happen. The early adopters - people like Alexey, Hailey, and the countless others building MCP solutions - are already seeing the benefits. They're not just more productive; they're working in fundamentally different ways.

The question isn't whether AI integration will become standardized - it's whether you'll be ready when it happens.

Conclusions

We've covered a lot of ground in this article - from the frustrating reality of fragmented AI integrations to the elegant solution that MCP provides. We've seen real stories from developers and professionals who've transformed their workflows, explored the technical foundation that makes it all possible, and looked at practical use cases that demonstrate MCP's potential.

But here's the most important takeaway: MCP isn't just a technical protocol. It's a new way of thinking about the relationship between humans and AI. Instead of AI being an isolated tool that we consult occasionally, MCP enables AI to become a true collaborator that understands our context, accesses our data, and helps us accomplish our goals.

The Three Key Insights

If you remember nothing else from this article, remember these three things:

First, the integration problem is real and it's holding back AI's potential. Every hour you spend copying data between systems, every custom integration you build, every workflow that breaks when an API changes - these aren't just minor inconveniences. They're symptoms of a fundamental problem that MCP solves.

Second, MCP makes sophisticated AI integration accessible to everyone. You don't need to be a protocol engineer or AI researcher to benefit from MCP. Whether you're automating content creation, streamlining research workflows, or building custom AI applications, MCP provides the foundation you need.

Third, the future is AI-native, and MCP is how we get there. The organizations and individuals who adopt MCP early will have a significant advantage as AI becomes more central to how we work. They'll have the infrastructure, experience, and mindset needed to thrive in an AI-driven world.

Next Steps

So where do you go from here? Start simple. Pick one workflow that frustrates you daily - maybe it's searching for files, updating project status, or researching competitors. Explore how MCP could help automate or streamline that process.

If you're a developer, try building a simple MCP server for something you care about. If you're a business professional, experiment with Claude Desktop and existing MCP integrations. If you're an organizational leader, start conversations about how MCP could transform your team's workflows.
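If you want a feel for what a simple MCP server involves before reaching for an official SDK, here is a minimal sketch that uses only the Python standard library. It assumes the stdio transport with one JSON-RPC 2.0 message per line, answers `initialize`, `tools/list`, and `tools/call`, and exposes a single hypothetical `word_count` tool. The protocol version string `"2024-11-05"` and the server name are assumptions for illustration; the sketch skips argument validation, capability negotiation beyond tools, and most error cases, so treat it as a learning aid rather than a reference implementation.

```python
import json
import sys

# Hypothetical example tool: counts whitespace-separated words in a string.
TOOLS = {
    "word_count": {
        "description": "Count the words in a piece of text",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "handler": lambda args: str(len(args["text"].split())),
    }
}


def handle(msg):
    """Return a JSON-RPC response dict for a request, or None for a notification."""
    if "id" not in msg:  # notifications (e.g. notifications/initialized) get no reply
        return None
    method, params = msg.get("method"), msg.get("params") or {}

    def ok(result):
        return {"jsonrpc": "2.0", "id": msg["id"], "result": result}

    def err(code, message):
        return {"jsonrpc": "2.0", "id": msg["id"],
                "error": {"code": code, "message": message}}

    if method == "initialize":
        # Assumed protocol version and server identity, for illustration only.
        return ok({"protocolVersion": "2024-11-05",
                   "capabilities": {"tools": {}},
                   "serverInfo": {"name": "word-count-demo", "version": "0.1.0"}})
    if method == "tools/list":
        return ok({"tools": [{"name": name, "description": t["description"],
                              "inputSchema": t["inputSchema"]}
                             for name, t in TOOLS.items()]})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return err(-32602, f"Unknown tool: {params.get('name')}")  # invalid params
        text = tool["handler"](params.get("arguments") or {})
        return ok({"content": [{"type": "text", "text": text}], "isError": False})
    return err(-32601, f"Method not found: {method}")  # standard JSON-RPC code


def serve(stdin=sys.stdin, stdout=sys.stdout):
    """Read newline-delimited JSON-RPC messages and write one reply per request."""
    for line in stdin:
        if not line.strip():
            continue  # ignore blank lines
        reply = handle(json.loads(line))
        if reply is not None:
            stdout.write(json.dumps(reply) + "\n")
            stdout.flush()
```

To try it, you would add a call to `serve()` at the bottom of the script and register the script's launch command with an MCP client. Once the idea clicks, an official MCP SDK is the more practical route, since it takes care of the handshake, schemas, and transport details for you.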

The MCP ecosystem is growing rapidly, and there's never been a better time to get involved. The community is welcoming, the documentation is comprehensive, and the potential for impact is enormous.

The Bigger Picture

Ultimately, MCP represents something bigger than just a technical solution. It represents a vision of AI that's open, collaborative, and accessible. It's about building AI systems that enhance human capabilities rather than replacing them, that integrate seamlessly with our existing tools and workflows, and that adapt to our needs rather than forcing us to adapt to theirs.

As Alexey discovered, MCP changes how we think about AI: "Rather than seeing LLMs as tools to generate snippets of content, I now view them as collaborators in my creative process - partners who can see what I'm working on and contribute meaningfully to its development."

That transformation - from tool to collaborator - is what MCP makes possible. And it's just the beginning.

The future of AI integration is here. The question is: are you ready to be part of it?

References

[1] Anthropic. (2024). "Introducing the Model Context Protocol." Anthropic News. https://www.anthropic.com/news/model-context-protocol

[2] Model Context Protocol. (2024). "Official Documentation." https://modelcontextprotocol.io/